Seven Sketches in Compositionality(416)

By David Spivak and Brendan Fong [1].

Chapter 1: Generative Effects(86)

More than the sum of their parts(6)

A first look at generative effects(3)

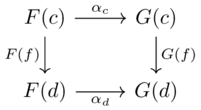

Central theme of category theory: study of structures and structure-preserving maps.

Asking which aspects of structure one wants to preserve becomes the question "what category are you working in?".

Example: there are many functions \(\mathbb{R} \xrightarrow{f} \mathbb{R}\), which we can think of as observations (rather than viewing \(x\) directly, we only view \(f(x)\)). Only some preserve the order of numbers; only some preserve distances between numbers.

The less structure that is preserved by our observation of a system, the more ‘surprises’ we see when we observe its operations - call these generative effects.

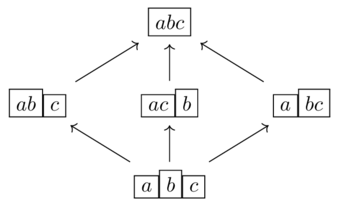

Consider a world of systems which are points which may or may not be connected. There are 5 partitionings or systems of three points.

Suppose Alice makes observations on systems with a function \(\phi\) which returns whether or not points are connected. Alice also has an operation on two systems called join which puts two points in the same partition if they are connected in either of the original systems.

Alice’s observation \(\phi\) does not preserve the join operation: observing and then joining can differ from joining and then observing.

Application: Alice is trying to address a possible contagion and needs to know whether or not it is safe to have each region extract their data and then aggregate vs aggregating data and then extracting from it.
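
The three-point example can be sketched in Python. This is a minimal illustration under stated assumptions, not the book's construction: the point labels `a`, `b`, `c`, the helper names `parts`, `join` and `phi`, and the choice that \(\phi\) observes whether `a` and `c` are connected are all hypothetical.

```python
from itertools import combinations

def parts(points, edges):
    """Group points into connected parts, given a set of undirected edges."""
    parent = {p: p for p in points}

    def find(x):
        while parent[x] != x:
            x = parent[x]
        return x

    for x, y in edges:
        parent[find(x)] = find(y)
    groups = {}
    for p in points:
        groups.setdefault(find(p), set()).add(p)
    return {frozenset(g) for g in groups.values()}

def join(points, sys_a, sys_b):
    """Join: points connected in either system end up in the same part."""
    edges = set()
    for system in (sys_a, sys_b):
        for part in system:
            edges.update(combinations(sorted(part), 2))
    return parts(points, edges)

def phi(system, x="a", y="c"):
    """Assumed observation: are x and y in the same part?"""
    return any(x in part and y in part for part in system)

POINTS = ["a", "b", "c"]
A = parts(POINTS, {("a", "b")})   # a-b connected, c alone
B = parts(POINTS, {("b", "c")})   # b-c connected, a alone
joined = join(POINTS, A, B)

observe_then_join = phi(A) or phi(B)   # join in Bool is logical or
join_then_observe = phi(joined)
```

Here `observe_then_join` and `join_then_observe` disagree, which is exactly the generative effect: neither system alone connects `a` to `c`, but the joined system does.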

Exercise 1-1(2)

Give an example and non-example for

an order-preserving function

a metric-preserving function

an addition-preserving function

Solution(1)

Order-preserving \(x+1\), non-order-preserving \(-x\)

Metric preserving \(x+1\), non-metric-preserving \(2x\)

Addition-preserving \(2x\), non-addition-preserving \(x^2\)

Ordering systems(3)

The operation of joining systems earlier can be derived from a more basic structure: order.

Let \(A \leq B\) be defined as a relationship that holds when \(\forall x,y:\ (x,y) \in A \implies (x,y) \in B\)

The joined system \(A \lor B\) is the smallest system that is bigger than both \(A\) and \(B\).

The possibility of a generative effect is captured in the inequality \(\phi(A) \lor \phi(B) \leq \phi(A \lor B)\), where \(\phi\) was defined earlier.

There was a generative effect because there exist systems for which this inequality is strict: \(\phi\) is false for each system individually but true for their join.

\(\phi\) preserves order but not join

Exercise 1-7(2)

Using the order \(false \leq true\) for \(\mathbb{B}\), what is:

\(true \lor false\)

\(false \lor true\)

\(true \lor true\)

\(false \lor false\)

Solution(1)

This is the same as logical or: \(true,\ true,\ true,\ false\)

What is order(11)

Review of sets, relations, functions(1)

Basic review of set, subset, partition, equivalence relation.

A partition of a set is a surjective map to the parts.

Any \(a \in A\) can be thought of as a function \(\{1\} \xrightarrow{a} A\)

Exercise 1-16(2)

Suppose \(A\) is a partitioned set and \(P,Q\) are two partitions such that for each \(p \in P\) there exists a \(q \in Q\) with \(A_p=A_q\)

Show that for each \(p \in P\) there is at most one \(q \in Q\) such that \(A_p = A_q\)

Show that for each \(q \in Q\) there is a \(p \in P\) such that \(A_p = A_q\)

Solution(1)

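Suppose \(A_p = A_q\) and \(A_p = A_{q'}\) with \(q' \ne q\). Then \(A_q = A_{q'}\), but the partition rule says that parts with distinct labels have an empty intersection, which would force \(A_p\) to be empty (and \(A_p\) cannot be empty by the other rule). So there is at most one such \(q\).

Take any \(q \in Q\). Since \(A_q\) is nonempty, pick \(a \in A_q\); \(a\) lies in some part \(A_p\), and by hypothesis \(A_p = A_{q'}\) for some \(q' \in Q\). Then \(a \in A_q \cap A_{q'}\), so \(q = q'\) and \(A_p = A_q\).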

Exercise 1-20(2)

Finish the proof of Proposition 1.19. Suppose that \(\sim\) is an equivalence relation on a set \(A\), and let \(P\) be the set of \(\sim\)-closed and \(\sim\)-connected subsets.

Show that each part \(A_p\) is nonempty

Show that \(p \ne q \implies A_p \cap A_q = \varnothing\)

Show that \(A = \bigcup_{p \in P} A_p\)

Proof(1)

Part of the definition of \(\sim\)-connected is being nonempty

Suppose \(a \in A_p \cap A_q\). Every \(x \in A_p\) satisfies \(x \sim a\) because \(A_p\) is \(\sim\)-connected, and then \(x \in A_q\) because \(A_q\) is \(\sim\)-closed, so \(A_p \subseteq A_q\); symmetrically \(A_q \subseteq A_p\). Hence \(A_p = A_q\), i.e. \(p = q\), which is the contrapositive of the claim.

Every \(a \in A\) is part of some equivalence class which is a \(\sim\)-closed and \(\sim\)-connected set, so \(A \subseteq \bigcup_{p \in P} A_p\)

The equivalence class is \(\sim\)-closed because two elements being \(\sim\)-related implies they are in the same equivalence class.

The equivalence class is \(\sim\)-connected because equivalence classes are nonempty and the equivalence relation is symmetric and transitive.

The constituents of \(A_p\) were defined to be subsets of \(A\), so unioning these will also be a subset of \(A\), i.e. \(\bigcup_{p \in P} A_p \subseteq A\).

Therefore \(A = \bigcup_{p \in P} A_p\).

Function(1)

A function from \(S\) to \(T\)

A relation \(F \subseteq S \times T\) such that \(\forall s \in S:\ \exists! t \in T:\ (s,t) \in F\)

The preimage of an element \(t \in T\) is \(\{s \in S\ |\ F(s)=t\}\)

\(\hookrightarrow\) Injectivity: \(s\ne s' \implies F(s)\ne F(s')\)

\(\twoheadrightarrow\) Surjectivity: \(\forall t \in T:\ \exists s \in S:\ (s,t) \in F\)

\(\xrightarrow \cong\) Bijectivity: both injectivity and surjectivity.

Partition(1)

A partition of set \(A\)

A set \(P\) (‘part labels’) and, for each \(p \in P\), a nonempty subset (‘pth part’) \(A_p \subseteq A\) such that:

\(A = \bigcup_{p \in P}A_p\)

\(p \ne q \implies A_p \cap A_q = \varnothing\)

Two partitions are considered the same if the partitioned groups are the same; the labels don’t matter.

Partitions are equivalences(2)

There is a bijection between ways to partition a set \(A\) and the equivalence relations on \(A\)

Proof(1)

Every partition gives rise to a distinct equivalence relation

Define \(a \sim b\) to mean \(a,b\) are in the same part. This is a reflexive, symmetric, and transitive relation given the definition of a partition.

Every equivalence relation gives rise to a distinct partition.

Define a subset \(X \subseteq A\) as \(\sim\)-closed if, for every \(x \in X\) and \(x' \sim x\), we have \(x' \in X\).

Define a subset \(X \subseteq A\) as \(\sim\)-connected if it is nonempty and \(\forall x,y \in X:\ x \sim y\)

The parts corresponding to \(\sim\) are precisely the \(\sim\)-closed and \(\sim\)-connected subsets.
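
The two directions of the bijection can be sketched for a small finite set. This is only an illustration: the function names and the example set are hypothetical, and a relation is represented as a set of pairs.

```python
def relation_from_partition(partition):
    """a ~ b iff a and b lie in the same part."""
    return {(a, b) for part in partition for a in part for b in part}

def partition_from_relation(elements, rel):
    """Each equivalence class {b | a ~ b} is a ~-closed, ~-connected subset."""
    return {frozenset(b for b in elements if (a, b) in rel) for a in elements}

A = {1, 2, 3, 4}
P = {frozenset({1, 2}), frozenset({3}), frozenset({4})}

rel = relation_from_partition(P)
round_trip = partition_from_relation(A, rel)
```

Going from the partition to the relation and back returns the original parts, which is the sense in which the two constructions are mutually inverse.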

Quotient(1)

A quotient of a set under an equivalence relation.

This is equivalent to the parts of the partition associated with the equivalence relation.

Relation(1)

A relation between sets \(X\) and \(Y\)

A subset \(R \subseteq X \times Y\).

A binary relation on \(X\) is a subset of \(X \times X\)

Preorders(10)

Preorders are just equivalence relations without the symmetric condition.

Every set can be considered as a discrete preorder with the binary relation of equality. Also the trivial opposite (codiscrete preorder) where all pairs are in the relation.

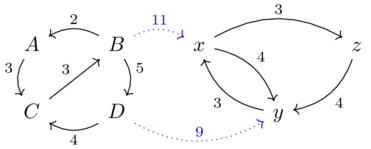

Every graph yields a preorder on the vertices where \(v \leq w\) iff there is a path from \(v\) to \(w\).

Reflexive because of length-0 paths, transitive because of path concatenation.
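
A small sketch of the reachability preorder, assuming a graph given as a set of (source, target) pairs (so parallel arrows are collapsed, which does not affect the preorder). The vertex names and the function name `reachability` are hypothetical.

```python
def reachability(vertices, arrows):
    """Return the set of pairs (v, w) such that there is a path from v to w."""
    leq = {(v, v) for v in vertices}   # length-0 paths give reflexivity
    leq |= set(arrows)                 # length-1 paths
    changed = True
    while changed:                     # concatenate paths until nothing new appears
        changed = False
        for (a, b) in list(leq):
            for (c, d) in list(leq):
                if b == c and (a, d) not in leq:
                    leq.add((a, d))
                    changed = True
    return leq

V = {"x", "y", "z"}
ARROWS = {("x", "y"), ("y", "z")}
LEQ = reachability(V, ARROWS)
```

With these arrows, `("x", "z")` is in `LEQ` by concatenating the two arrows, while `("z", "x")` is not.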

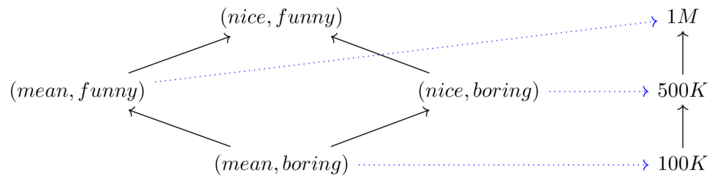

Product of two preorders can be considered as a preorder by only comparing things when both preorders independently agree on the pairs.

Preorder(1)

A preorder / partial order / total order relation on a set \(X\)

Preorder: a binary relation on \(X\) that is reflexive and transitive.

A partial order (poset) has the additional constraint that \(x \leq y \land y \leq x \implies x=y\)

We can always get a partial order from a preorder by quotienting, so it’s not that special.

A total order has all elements comparable.

Exercise 1-53(2)

For any set \(S\) there is a coarsest partition having just one part.

What surjective function does this correspond to?

(Likewise for the finest partition?)

Solution(1)

The trivial map to \(\{1\}\) and the identity, respectively.

Exercise 1-55(2)

Prove that the set of upper sets on a discrete preorder for some set \(X\) is simply the power set \(P(X)\)

Solution(1)

The upper set criterion is satisfied by any subset, thus all possible subsets are upper sets.

The binary relation is equality, thus the upper set criterion becomes \(p \in U \land p = q \implies q \in U\) or alternatively \(p \in U \implies p \in U\) which is always satisfied.

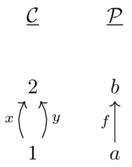

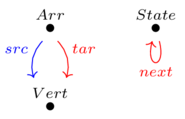

Graph(1)

A graph (of vertices, arrows)

A tuple \(G=(V, A, s, t)\)

Set of vertices and arrows, with two functions \(A\rightarrow V\) which say where the source and target of each arrow goes to.

A path in \(G\) is any sequence of arrows such that the target of one arrow is the source of the next (including length 0 and 1 sequences).

Opposite preorder(1)

An opposite preorder

Given a preorder \((P, \leq)\), we define \(p \leq^{op} q \iff q \leq p\)

Skeletality(1)

Categorical skeletality generally means \(x \cong y \implies x = y\)

E.g. a skeletal preorder is a poset.

Upper set(1)

An upper set in \(P\) for some preorder \((P, \leq)\)

A subset \(U\) of \(P\) satisfying the condition \(p \in U \land p \leq q \implies q \in U\)

Anything you add to the upper set means you have to add everything greater than it.

Example: the possible upper sets of \(Bool\) are \(\{\varnothing, \{true\}, \{true, false\}\}\)
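
For a finite preorder the upper sets can be enumerated by brute force. The sketch below assumes the order is given as a set of pairs \((p, q)\) meaning \(p \leq q\); the helper names are hypothetical.

```python
from itertools import chain, combinations

def powerset(xs):
    xs = list(xs)
    return [frozenset(c)
            for c in chain.from_iterable(combinations(xs, r) for r in range(len(xs) + 1))]

def is_upper(U, leq):
    """U is upper if p in U and p <= q together force q in U."""
    return all(q in U for (p, q) in leq if p in U)

def upper_sets(elements, leq):
    return {U for U in powerset(elements) if is_upper(U, leq)}

BOOL = [False, True]
BOOL_LEQ = {(False, False), (False, True), (True, True)}   # false <= true
bool_uppers = upper_sets(BOOL, BOOL_LEQ)

# Discrete preorder on a three-element set: only x <= x.
discrete_uppers = upper_sets([1, 2, 3], {(x, x) for x in [1, 2, 3]})
```

`bool_uppers` contains the three sets listed in the example, and `discrete_uppers` is the whole power set (8 subsets), matching Exercise 1-55.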

Monotone maps(15)

Category theory emphasizes that preorders themselves (each a miniature world, composed of many relationships) can be related to one another.

Monotone maps are important because they are the right notion of structure-preserving map for preorders.

The map (‘cardinality’) sending a power-set (with inclusion ordering) to the natural numbers with standard ordering is a monotone map.

Given a preorder, the inclusion map of the upper sets of \(P\) (ordered by inclusion) to the power set of \(P\) (ordered by inclusion) is a monotone map.

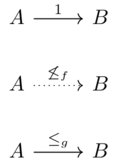

Monotone map(1)

A monotone map between preorders \((A, \leq_A), (B, \leq_B)\)

A function \(A \xrightarrow{f} B\) such that \(\forall x,y \in A: x \leq_A y \implies f(x) \leq_B f(y)\)
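
The definition can be checked mechanically on finite preorders. This is a sketch with hypothetical helper names, where each order is passed as a two-argument predicate; it tests the cardinality map mentioned above and a non-example (negation on the naturals).

```python
from itertools import chain, combinations

def is_monotone(f, elements, leq_a, leq_b):
    """Check x <=_A y implies f(x) <=_B f(y) for all pairs of elements."""
    return all(leq_b(f(x), f(y))
               for x in elements for y in elements if leq_a(x, y))

def powerset(xs):
    xs = list(xs)
    return [frozenset(c)
            for c in chain.from_iterable(combinations(xs, r) for r in range(len(xs) + 1))]

subsets = powerset({1, 2, 3})
number_leq = lambda m, n: m <= n
subset_leq = lambda s, t: s <= t   # frozenset <= is inclusion

cardinality_is_monotone = is_monotone(len, subsets, subset_leq, number_leq)
negation_is_monotone = is_monotone(lambda n: -n, range(5), number_leq, number_leq)
```

Cardinality passes the check, while negation fails because it reverses the order.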

Dagger preorder(1)

A dagger preorder

\(q \leq p \iff p \leq q\) - this is tantamount to an equivalence relation.

Preorder isomorphism(1)

A preorder isomorphism

A monotone map for which there exists an inverse monotone map (\(f;g=id\) and \(g;f = id\))

If this exists, we say the preorders involved are isomorphic.

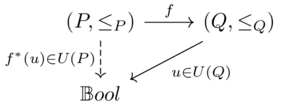

Pullback along monotone(1)

A pullback along a monotone map \(P \xrightarrow{f} Q\)

We take the preimage of \(f\), but not for a single element of \(Q\) but rather an upper set of \(Q\).

Noting that upper sets are monotone maps to Bool, the fact that the result of a pullback is an upper set in \(P\) follows from the fact that composition preserves monotonicity.

Therefore the type of the pullback is \(U(Q) \xrightarrow{f^*} U(P)\)
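
A small sketch of the pullback, assuming \(P\) is the power set of a two-element set ordered by inclusion, \(Q = \{0, 1, 2\}\) with the usual order, and \(f\) is cardinality. These choices and the helper names are illustrative only.

```python
from itertools import chain, combinations

def pullback(f, P, U):
    """f^*(U): the elements of P that f sends into the upper set U of Q."""
    return {p for p in P if f(p) in U}

def is_upper(U, elements, leq):
    return all(q in U for p in U for q in elements if leq(p, q))

base = [1, 2]
P = [frozenset(c)
     for c in chain.from_iterable(combinations(base, r) for r in range(len(base) + 1))]
Q = [0, 1, 2]
U = {1, 2}                       # the upper set {n | n >= 1} of Q

pulled = pullback(len, P, U)     # the nonempty subsets of {1, 2}
```

The result is again an upper set, this time in \(P\): adding elements to a nonempty subset keeps it nonempty.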

Exercise 1-66(2)

Let \((P, \leq)\) be a preorder and recall the opposite preorder.

Show that the set \(\uparrow(p) := \{p' \in P\ |\ p \leq p'\}\) is an upper set for any \(p \in P\)

Show that this construction defines a monotone map \((P, \leq^{op}) \xrightarrow{\uparrow} U(P)\)

Show that \(p \leq p' \iff \uparrow(p') \subseteq \uparrow(p)\)

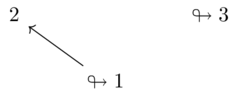

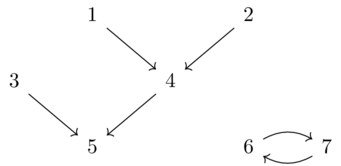

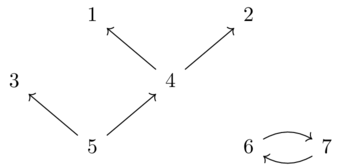

Draw a picture of the map \(\uparrow\) in the case where \(P\) is the preorder \((b\geq a \leq c)\).

Solution(1)

This is the Yoneda lemma for preorders (up to equivalence, to know an element is the same as knowing its upper set).

This is basically the definition of an upper set starting at some element.

Interpreting the meaning of the preorder in the domain and codomain of \(\uparrow\), this boils down to showing \(p \leq p'\) implies \(\uparrow(p') \subseteq \uparrow(p)\) - This is shown by noting that \(p' \in \uparrow(p)\) and anything ‘above’ \(p'\) (i.e. \(\uparrow(p')\)) will therefore be in \(\uparrow(p)\).

Forward direction has been shown above. The other direction is shown just by noting that \(p'\) must be an element of \(\uparrow(p')\) and, by the subset relation, also of \(\uparrow(p)\); therefore \(p \leq p'\).

Exercise 1-67(2)

Show that when \(P\) is a discrete preorder, then every function \(P \rightarrow Q\) is a monotone map, regardless of the order \(\leq_Q\).

Solution(1)

The only time the monotonicity criterion is deployed is when two elements of \(P\) are related, and for a discrete preorder this means we only have to check whether \(f(a) \leq_Q f(a)\), which is true because preorders are reflexive.

Exercise 1-73(2)

Recall skeletal preorders and dagger preorders.

Show that a skeletal dagger preorder is just a discrete preorder (and hence can be identified with a set)

Solution(1)

Because preorders are reflexive, we just have to show \(a \ne b \implies a \not\leq b\), or its contrapositive: \(a \leq b \implies a = b\).

\(a \leq b \overset{dagger}{\implies} b \leq a \overset{skeletal}{\implies} a = b\)

Identities are monotone(2)

For any preorder, the identity function is a monotone map.

The composition of two monotone maps (\(P \xrightarrow{f} Q \xrightarrow{g} R\)) is also monotone.

Proof(1)

Monotonicity translates to \(a \leq b \implies a \leq b\) and is trivially true.

Need to show: \(a \leq_P b \implies g(f(a)) \leq_R g(f(b))\)

The monotonicity of \(f\) gives us \(f(a) \leq_Q f(b)\) and the monotonicity of \(g\) gives us the result we need.

Monotones to bool(2)

Let \(P\) be a preorder. Monotone maps \(P \rightarrow \mathbb{B}\) are in one-to-one correspondence with upper sets of \(P\).

Proof(1)

Let \(P \xrightarrow{f} \mathbb{B}\) be a monotone map. The subset \(f^{-1}(true)\) is an upper set.

Suppose \(p \in f^{-1}(true)\) and \(p \leq q\).

Then \(true = f(p) \leq f(q)\) because \(f\) is monotonic.

But there is nothing strictly greater than \(true\) in \(\mathbb{B}\), so \(f(q) = true\) and therefore \(q \in f^{-1}(true)\) too.

Suppose \(U \in U(P)\), and define \(P\xrightarrow{f_U}\mathbb{B}\) such that \(f_U(p)=true \iff p \in U\)

Given there are only two values in \(\mathbb{B}\) and an arbitrary \(p\leq q\), the only way for this to not be monotone is for \(f_U(p) \land \neg f_U(q)\), but this contradicts the definition of an upper set.

The two constructions are mutually inverse.

Meets and joins(14)

Definition and basic examples(9)

There could be multiple meets/joins, but the definition forces them to be isomorphic.

An arbitrary preorder need not have a meet nor join.

E.g. a two-element discrete preorder has no overall meet/join, because the meet/join must be less/greater than or equal to both elements in the set, and no element is comparable to both.

Exercise 1-85(2)

Let \(p \in P\) be an element in a preorder. Consider \(A = \{p\}\)

Show that \(\wedge A \cong p\)

Show that if \(P\) is a partial order, then \(\wedge A = p\)

Are the analogous facts true when \(\wedge\) is replaced by \(\vee\)?

Solution(1)

The first condition of the meet gives us that \(\wedge A \leq p\).

The second condition is that \(\forall q \in P: q \leq p \implies q \leq \wedge A\).

Substituting \(p\) in for \(q\), the antecedent holds such that we get \(p \leq \wedge A\).

Therefore \(p \cong \wedge A\)

In a partial order, unlike a general preorder, isomorphic elements are equal, so we directly get that \(p = \wedge A\)

Yes, the argument is perfectly symmetric.

Meet and join(1)

For a preorder \((P, \leq)\), the meet and join of \(A \subseteq P\).

The meet \(\wedge A\) is an element such that

\(\forall a \in A: \wedge A \leq a\)

\(\forall q \in P: (\forall a \in A: q \leq a) \implies q \leq \wedge A\)

Think of as a GREATEST LOWER BOUND

The join \(\vee A\) is an element such that

\(\forall a \in A: a \leq \vee A\)

\(\forall q \in P: (\forall a \in A: a \leq q) \implies \vee A \leq q\)

Think of as a LEAST UPPER BOUND
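
Both definitions translate directly into a brute-force search over a finite preorder. The sketch below returns every element satisfying the definition (possibly none, possibly several isomorphic ones). The example preorder, the divisors of 12 ordered by divisibility, is an assumption made for illustration, as are the function names.

```python
def meets(A, P, leq):
    """All greatest lower bounds of A in the finite preorder (P, leq)."""
    lower = [q for q in P if all(leq(q, a) for a in A)]
    return [m for m in lower if all(leq(q, m) for q in lower)]

def joins(A, P, leq):
    """All least upper bounds of A in the finite preorder (P, leq)."""
    upper = [q for q in P if all(leq(a, q) for a in A)]
    return [j for j in upper if all(leq(j, q) for q in upper)]

DIVISORS = [1, 2, 3, 4, 6, 12]
divides = lambda m, n: n % m == 0        # m <= n iff m divides n

meet_4_6 = meets({4, 6}, DIVISORS, divides)   # greatest common divisor
join_4_6 = joins({4, 6}, DIVISORS, divides)   # least common multiple

# A two-element discrete preorder: no meet or join of the whole set.
discrete_meet = meets({"x", "y"}, ["x", "y"], lambda p, q: p == q)
```

In the divisibility order the meet is the gcd and the join is the lcm, while the discrete example returns an empty list, illustrating that meets need not exist.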

Meets and joins of bool(1)

Meets and joins of total order(1)

In a total order, the meet of a set is its infimum, while the join is the supremum.

Note that \(\mathbb{B}\) is a total order, to generalize Example 1.88.

Meets of subsets(2)

Suppose \((P,\leq)\) is a preorder and \(A \subseteq B \subseteq P\) are subsets that have meets. Then \(\bigwedge B \leq \bigwedge A\)

Solution(1)

Let \(m = \bigwedge A\) and \(n = \bigwedge B\).

For any \(a \in A\) we also have \(a \in B\), so \(n \leq a\) because \(n\) is a lower bound for \(B\).

Thus \(n\) is also a lower bound for \(A\) and hence \(n \leq m\) because \(m\) is \(A\)’s greatest lower bound.

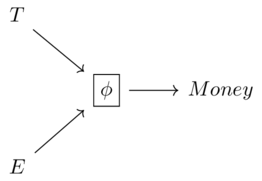

Back to observations and generative effects(5)

We are comparing the observation of a combined system or the combination of observations.

The other direction, restricting an observation of a system to a subsystem, does not cause problems for the monotone maps considered here (those that preserve meets but not joins).

Monotone maps that preserve meets(1)

A monotone map \(P \xrightarrow{f} Q\) that preserves meets

\(f(a \land_P b) \cong f(a) \land_Q f(b)\)

Likewise, to preserve joins is for \(f(a \lor_P b) \cong f(a) \lor_Q f(b)\)

Generative effect(1)

A monotone map \(P \xrightarrow{f} Q\) has a generative effect

\(\exists a,b \in P: f(a) \lor f(b) \not\cong f(a \lor b)\)

Exercise 1-94(2)

Prove that for any monotone map \(P \xrightarrow{f} Q\):

if there exist \(a \lor b \in P\) and \(f(a) \lor f(b) \in Q\):

\(f(a) \lor_Q f(b) \leq f(a \lor_P b)\)

Solution(1)

Let’s abbreviate \(f(a\ \lor_P\ b)\) as \(JF\) (join-first) and \(f(b)\ \lor_Q\ f(a)\) as \(JL\) (join-last)

This exercise is to show that \(JL \leq JF\)

The property of joins gives us, in \(P\), that \(a\ \leq\ (a \lor b)\) and \(b\ \leq\ (a \lor b)\)

Monotonicity then gives us, in \(Q\), that \(f(a) \leq JF\) and \(f(b) \leq JF\)

We also know from the property of joins, in \(Q\), that \(f(a) \leq JL\) and \(f(b) \leq JL\)

So \(JF\) is an upper bound of both \(f(a)\) and \(f(b)\) in \(Q\).

But \(JL\) is the least upper bound of \(f(a)\) and \(f(b)\), so it is below every other upper bound; in particular \(JL \leq JF\).

Galois connections(30)

Definition and examples(5)

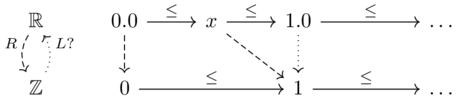

Exercise 1-101(2)

Does \(\mathbb{R}\xrightarrow{\lceil x/3 \rceil}\mathbb{Z}\) have a left adjoint \(\mathbb{Z} \xrightarrow{L} \mathbb{R}\)? If not, why? If so, does its left adjoint have a left adjoint?

Solution(1)

Write \(R(x) = \lceil x/3 \rceil\) and assume we have an arbitrary left adjoint, \(L\).

For every \(x > 0\) close to \(0.0 \in \mathbb{R}\), we have \(1 \leq R(x)\), therefore \(L(1) \leq x\) because \(L\) is left adjoint.

Since this holds for all such \(x\), \(L(1)\leq 0.0\), yet this implies \(1 \leq R(0.0)\).

This contradicts \(R(0.0)=0\), therefore no left adjoint exists.

Floor and ceil(1)

Consider the map \(\mathbb{Z} \xrightarrow{3z} \mathbb{R}\) which sends an integer to \(3z\) in the reals.

To find a left adjoint for this map, we write \(\lceil r \rceil\) for the smallest integer above \(r \in \mathbb{R}\) and \(\lfloor r \rfloor\) for the largest integer below \(r \in \mathbb{R}\)

The left adjoint is \(\lceil r/3 \rceil\)

Check: \(\lceil x/3 \rceil \leq y\) \(\iff x \leq 3y\)
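
The check can be spot-tested numerically. This sketch assumes a finite sample of rationals standing in for \(\mathbb{R}\) and a finite range of integers; it uses exact `Fraction` arithmetic to avoid floating-point rounding. It also shows that \(\lfloor x/3 \rfloor\) does not satisfy the left-adjoint condition.

```python
import math
from fractions import Fraction

def left(x):
    """Candidate left adjoint: ceil(x / 3)."""
    return math.ceil(x / 3)

def right(y):
    """The map z -> 3z."""
    return 3 * y

xs = [Fraction(n, 4) for n in range(-40, 41)]   # sample points in [-10, 10]
ys = range(-5, 6)

# Galois condition: left(x) <= y  iff  x <= right(y)
ceil_holds = all((left(x) <= y) == (x <= right(y)) for x in xs for y in ys)

# The floor fails the same condition, e.g. at x = 1, y = 0.
floor_holds = all((math.floor(x / 3) <= y) == (x <= right(y)) for x in xs for y in ys)
```

A passing sample is of course not a proof, but the failing case for the floor is a genuine counterexample.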

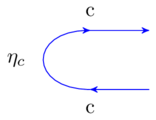

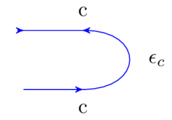

Galois connection(1)

A Galois connection between preorders \(P\) and \(Q\), and the left and right adjoints of a Galois connection

A pair of monotone maps \(P \xrightarrow{f} Q\) and \(Q \xrightarrow{g} P\) such that:

\(f(p) \leq q \iff p \leq g(q)\)

\(f\) is left adjoint and \(g\) is right adjoint of the Galois connection.

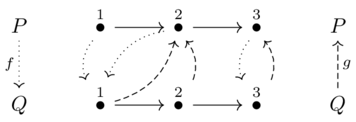

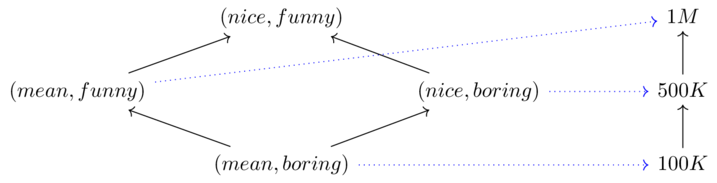

Total order galois connections(1)

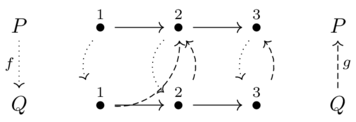

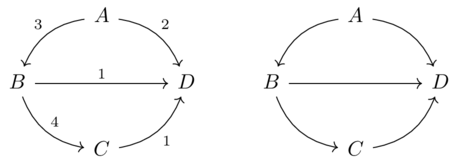

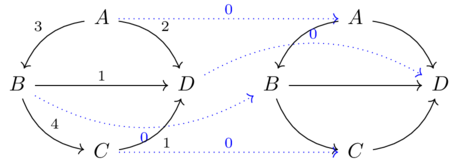

Consider the total orders \(P = Q = \underline{3}\) with the following monotone maps:

These do form a Galois connection

These maps do not form a Galois connection:

These do not because of \(p=2, q = 1\)

Here \(f(p)=2 \not\leq q=1\), whereas \(p = 2 \leq g(q)=2\) holds, so the two sides of the biconditional disagree.

In some sense that can be formalized, for total orders the notion of Galois connection corresponds to the maps not ‘crossing over’.

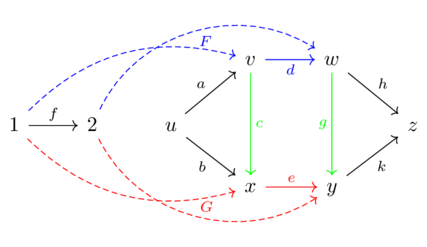

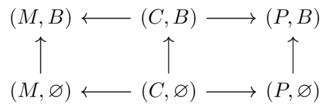

Back to partitions(3)

Given any function \(S \xrightarrow{g} T\), we can induce a Galois connection \(Prt(S) \leftrightarrows Prt(T)\) between the sets of partitions of the domain and codomain.

Determine the left adjoint \(Prt(S) \xrightarrow{g_!} Prt(T)\)

Starting with a given partition of \(S\), obtain a partition of \(T\) by saying two elements \(t_1,t_2\) are in the same part if \(\exists s_1 \sim s_2: g(s_1)=t_1 \land g(s_2)=t_2\)

This is not necessarily a transitive relation, so take the transitive closure.

Determine the right adjoint \(Prt(T) \xrightarrow{g^*} Prt(S)\)

Given a partition of \(T\), we say two elements in \(S\) are connected iff \(g(s_1) \sim g(s_2)\)
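
Both adjoints can be sketched for finite sets. The code below is an illustration under assumptions: partitions are sets of frozensets, `finer(c, d)` encodes \(c \leq d\) (finer is smaller), and the function \(g\) is the one from Exercise 1-106, which happens to be surjective. All helper names are hypothetical.

```python
def pushforward(g, T, c):
    """g_!(c): merge t1, t2 whenever they are images of points in one part of c."""
    parent = {t: t for t in T}

    def find(t):
        while parent[t] != t:
            t = parent[t]
        return t

    for part in c:
        images = [g[s] for s in part]
        for t in images[1:]:
            parent[find(t)] = find(images[0])   # union-find gives the transitive closure
    groups = {}
    for t in T:
        groups.setdefault(find(t), set()).add(t)
    return {frozenset(p) for p in groups.values()}

def pullback(g, S, d):
    """g^*(d): s1 ~ s2 iff g(s1) and g(s2) lie in the same part of d."""
    label = {t: part for part in d for t in part}
    groups = {}
    for s in S:
        groups.setdefault(label[g[s]], set()).add(s)
    return {frozenset(p) for p in groups.values()}

def finer(c, d):
    """c <= d: every part of c sits inside some part of d."""
    return all(any(p <= q for q in d) for p in c)

def partitions(xs):
    """Enumerate all partitions of a finite list."""
    xs = list(xs)
    if not xs:
        yield set()
        return
    first, rest = xs[0], xs[1:]
    for sub in partitions(rest):
        yield sub | {frozenset({first})}
        for part in sub:
            yield (sub - {part}) | {part | {first}}

S, T = [1, 2, 3, 4], [12, 3, 4]
g = {1: 12, 2: 12, 3: 3, 4: 4}
c = {frozenset({1, 3}), frozenset({2}), frozenset({4})}
pushed = pushforward(g, T, c)

# Galois condition, checked over every pair of partitions.
adjunction_holds = all(
    finer(pushforward(g, T, c1), d1) == finer(c1, pullback(g, S, d1))
    for c1 in partitions(S) for d1 in partitions(T)
)
```

For this \(g\), the exhaustive check over all 15 partitions of \(S\) and 5 partitions of \(T\) confirms \(g_!(c) \leq d \iff c \leq g^*(d)\).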

Exercise 1-106(2)

Given a function \(\{1 \mapsto 12, 2 \mapsto 12, 3 \mapsto 3, 4 \mapsto 4\}\) from the four element set \(S\) to the three element set \(T\)

Choose a nontrivial partition \(c \in Prt(S)\) and compute \(g_!(c) \in Prt(T)\)

Choose any coarser partition \(g_!(c)\leq d \in Prt(T)\)

Choose any non-coarser partition \(g_!(c) \not\leq e \in Prt(T)\)

Find \(g^*(d)\) and \(g^*(e)\)

Show that the adjunction formula is true, i.e. that \(c \leq g^*(d)\) (because \(g_!(c) \leq d\)) and \(c \not\leq g^*(e)\) (because \(g_!(c) \not\leq e\))

Solution(1)

\(c = \{(1, 3),(2,), (4,)\}\), \(g_!(c)\) is then \(\{(12,3),(4,)\}\)

\(d = \{(12,3,4)\}\)

\(e = \{(12,),(3,),(4,)\}\)

\(g^*(d)=\{(1,2,3,4)\}, g^*(e)=\{(1,2),(3,),(4,)\}\)

\(c \leq g^*(d)\) since \(g^*(d)\) has a single part, and \(c \not\leq g^*(e)\) since \(1\) and \(3\) share a part in \(c\) but not in \(g^*(e)\)

Basic theory of Galois connections(12)

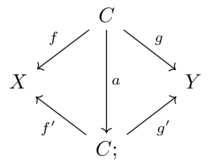

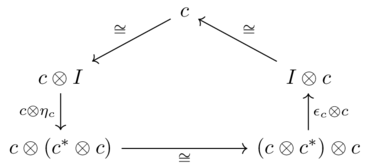

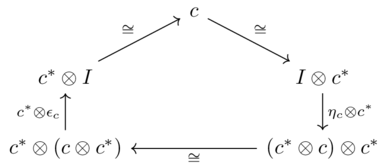

Galois connection alternate form(2)

Suppose \(P \overset{g}{\underset{f}{\leftrightarrows}} Q\) are monotone maps. The following are equivalent:

f and g form a Galois connection where f is left adjoint to g

for every \(p \in P, q \in Q\) we have:

\(p \leq g(f(p))\)

\(f(g(q)) \leq q\)

Proof(1)

Forward direction

Take any \(p \in P\) and let \(q = f(p) \in Q\)

By reflexivity, we have in \(Q\) that \(f(p) \leq q\)

By definition of Galois connection, we then have \(p \leq g(q)\), so (1) holds.

Take any \(q \in Q\) and let \(p = g(q) \in P\)

By reflexivity, we have in \(P\) that \(p \leq g(q)\)

By definition of Galois connection, we then have \(f(p) \leq q\), so (2) holds.

Reverse direction

Want to show \(f(p)\leq q \iff p \leq g(q)\)

Suppose \(f(p) \leq q\)

Since g is monotonic, \(g(f(p)) \leq g(q)\)

but, because (1), \(p \leq g(f(p))\), therefore \(p \leq g(q)\)

Suppose \(p \leq g(q)\)

Since f is monotonic, \(f(p) \leq f(g(q))\)

but, because (2), \(f(g(q)) \leq q\), therefore \(f(p) \leq q\)

Adjoints preserving meets and joins(2)

Let \(P \overset{f}{\underset{g}{\rightleftarrows}} Q\) be monotone maps with \(f \dashv g\).

Right adjoints preserve meets

Left adjoints preserve joins

Given \(A \subseteq P\) and its image \(f(A) \subseteq Q\)

Then, if \(A\) has a join \(\vee A \in P\), then \(\vee f(A) \in Q\) exists

And \(f(\vee A) \cong \vee f(A)\)

Proof(1)

Left adjoints preserve joins

Let \(j = \vee A \in P\)

Given f is monotone, \(\forall a \in A: f(a) \leq f(j)\), i.e. we have \(f(j)\) as an upper bound for \(f(A)\)

To show it is a least upper bound, take some arbitrary other upper bound b for \(f(A)\) and show that \(f(j) \leq b\)

For each \(a \in A\), \(f(a) \leq b\) gives \(a \leq g(b)\) by the Galois connection, so \(g(b)\) is an upper bound of \(A\). Because \(j\) is the least upper bound of \(A\), we have \(j \leq g(b)\)

Using the Galois connection, we have \(f(j) \leq b\) showing that \(f(j)\) is the least upper bound of \(f(A) \subseteq Q\).

Right adjoints preserving meets is dual to this.

Adjoint functor theorem for preorders(2)

Adjoint functor theorem for preorders

Suppose \(Q\) is a preorder that has all meets and \(P\) is any preorder.

A monotone map \(Q \xrightarrow{g} P\) preserves meets iff it is a right adjoint.

Likewise, suppose \(P\) has all joins and \(Q\) is any preorder:

A monotone map \(P \xrightarrow{f} Q\) preserves joins iff it is a left adjoint.

The reverse direction (right adjoints preserve meets, left adjoints preserve joins) was proved in Adjoints preserving meets and joins.

Proof(1)

Given a meet-preserving \(g\) (the prospective right adjoint), construct the left adjoint by:

\(f(p) := \bigwedge\{q \in Q\ |\ p \leq g(q)\}\)

First need to show this is monotone:

If \(p \leq p'\), the relationship between the sets whose meets define \(f(p)\) and \(f(p')\) is that the latter is a subset of the former.

By Proposition 1.91 we infer that \(f(p) \leq f(p')\).

Then need to show that it satisfies the left adjoint property:

Show that \(p_0 \leq g(f(p_0))\)

\(p_0 \leq \bigwedge \{g(q)\ |\ p_0 \leq g(q)\} \cong g(\bigwedge\{q\ |\ p_0 \leq g(q)\}) = g(f(p_0))\)

The first inequality comes from the fact that the meet of the set (of which \(p_0\) is a lower bound) is a greatest lower bound.

The congruence comes from the assumption that \(g\) preserves meets.

Show that \(f(g(q_0)) \leq q_0\)

\(f(g(q_0)) = \bigwedge\{q\ |\ g(q_0) \leq g(q)\} \leq \bigwedge \{q_0\} = q_0\)

The first inequality comes from Proposition 1.91 since \(\{q_0\}\) is a subset of the first set.

The second equality is a property of the meet of single element sets.

Proof of a left adjoint construction (assuming it preserves joins) is dual.
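
The construction \(f(p) := \bigwedge\{q \in Q\ |\ p \leq g(q)\}\) can be tried on a concrete case. The sketch below assumes \(Q\) is a finite range of integers (a total order, so the meet of a nonempty set is its minimum), \(P\) is a finite sample of rationals, and \(g(q) = 3q\); these choices are illustrative and are picked so that the set under the meet is never empty.

```python
import math
from fractions import Fraction

Q = range(-10, 11)                              # integers -10..10
P = [Fraction(n, 2) for n in range(-40, 41)]    # rationals in [-20, 20]

def g(q):
    """The meet-preserving map q -> 3q."""
    return 3 * q

def f(p):
    """Left adjoint built as a meet; in a total order the meet is the minimum."""
    return min(q for q in Q if p <= g(q))

# The construction recovers the ceiling formula from Floor and ceil.
matches_ceiling = all(f(p) == math.ceil(p / 3) for p in P)

# It satisfies the Galois condition f(p) <= q  iff  p <= g(q).
is_left_adjoint = all((f(p) <= q) == (p <= g(q)) for p in P for q in Q)
```

On this sample the meet-based definition agrees with \(\lceil p/3 \rceil\), which is the left adjoint found by hand earlier.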

Adjoints in Set(1)

Let \(A \xrightarrow{f} B\) be a set function, say between apples and buckets into which we put each apple.

The ‘pullback along f’ \(\mathbb{P}(B) \xrightarrow{f^*} \mathbb{P}(A)\) maps each subset \(B' \subseteq B\) to its preimage \(f^{-1}(B') \subseteq A\)

It tells you for a set of buckets all the apples contained in total.

It is a monotonic map which has a left and right adjoint: \(f_! \dashv f^* \dashv f_*\)

The left adjoint \(\mathbb{P}(A)\xrightarrow{f_!}\mathbb{P}(B)\) is given by the direct image

\(f_!(A') := \{b \in B\ |\ \exists a \in A': f(a)=b\}\)

Tells you for a set of apples all the buckets that contain at least one of those apples.

The right adjoint \(\mathbb{P}(A) \xrightarrow{f_*} \mathbb{P}(B)\) is defined as follows:

\(f_*(A') := \{b \in B\ |\ \forall a: f(a)=b \implies a \in A'\}\)

Tells you all the buckets which are all-\(A'\) (note that empty buckets vacuously satisfy this condition).

Adjoints often turn out to have interesting semantic interpretations.

If we replace the \(\leq\) in Proposition 1.107 with \(=\), then we obtain the notion of a preorder isomorphism.

Since left adjoints preserve joins, we know a monotone map cannot have generative effects iff it is left adjoint to some other monotone.

Exercise 1-110(2)

Show that if \(P \xrightarrow{f}Q\) has a right adjoint g, then it is unique up to isomorphism. Is the same true for left adjoints?

Solution(1)

Suppose \(h\) is also right adjoint to \(f\).

What it means for \(h \cong g\):

\(\forall q \in Q: h(q) \cong g(q)\)

\(g(q) \leq h(q)\)

Substitute \(g(q)\) for \(p\) in \(p \leq h(f(p))\) (from \(h\)’s adjointness) to get \(g(q) \leq h(f(g(q)))\)

Also apply \(h\) to both sides of \(f(g(q)) \leq q\) (from \(g\)’s adjointness) to get \(h(f(g(q)))\leq h(q)\)

The result follows from transitivity.

By symmetry (nothing was specified about \(h\) or \(g\)) the proof of \(h(q)\leq g(q)\) is the same.

Same reasoning for left adjoints.

Exercise 1-118(2)

Choose sets \(X,Y\) with between two and four elements each, and choose a function \(X \xrightarrow{f} Y\)

Choose two different subsets \(B_1, B_2 \subseteq Y\) and find \(f^*(B_1)\) and \(f^*(B_2)\)

Choose two different subsets \(A_1, A_2 \subseteq X\) and find \(f_!(A_1)\) and \(f_!(A_2)\)

With the same \(A_1, A_2\) find \(f_*(A_1)\) and \(f_*(A_2)\)

Solution(1)

\(\bar 3 \xrightarrow{f} \bar 4\) with \(\{1 \mapsto 2, 2 \mapsto 2, 3\mapsto 4\}\),\(A_1 = \{1,2\}, A_2=\{2,3\}, B_1=\{1\}, B_2=\{1,4\}\)

\(f^*(B_1)=\varnothing, f^*(B_2)=\{3\}\)

\(f_!(A_1)=\{2\},f_!(A_2)=\{2,4\}\)

\(f_*(A_1)=\{1,2,3\},f_*(A_2)=\{1,3,4\}\)
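
The three maps can be computed mechanically for this choice of \(f\). The following is a minimal sketch; the function names `pullback`, `direct_image` and `universal_image` are made up for illustration, and the last lines check the adjunctions \(f_! \dashv f^* \dashv f_*\) over all subsets.

```python
from itertools import combinations

X = [1, 2, 3]
Y = [1, 2, 3, 4]
f = {1: 2, 2: 2, 3: 4}          # the function chosen in the solution

def subsets(xs):
    return [frozenset(c) for r in range(len(xs) + 1) for c in combinations(xs, r)]

def pullback(B_sub):
    """f^*: the preimage of a subset of Y."""
    return frozenset(a for a in X if f[a] in B_sub)

def direct_image(A_sub):
    """f_!: buckets containing at least one apple of A_sub."""
    return frozenset(f[a] for a in A_sub)

def universal_image(A_sub):
    """f_*: buckets all of whose apples lie in A_sub (empty buckets included)."""
    return frozenset(b for b in Y if all(a in A_sub for a in X if f[a] == b))

A1, A2 = frozenset({1, 2}), frozenset({2, 3})
B1, B2 = frozenset({1}), frozenset({1, 4})

# f_! -| f^* :  f_!(A) <= B  iff  A <= f^*(B)
left_adjunction = all((direct_image(A) <= B) == (A <= pullback(B))
                      for A in subsets(X) for B in subsets(Y))
# f^* -| f_* :  f^*(B) <= A  iff  B <= f_*(A)
right_adjunction = all((pullback(B) <= A) == (B <= universal_image(A))
                       for A in subsets(X) for B in subsets(Y))

print(sorted(pullback(B1)), sorted(pullback(B2)))
print(sorted(direct_image(A1)), sorted(direct_image(A2)))
print(sorted(universal_image(A1)), sorted(universal_image(A2)))
```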

Closure operators(7)

Given a Galois connection we can compose the left and right maps to get a closure operator

Closure operator(1)

A closure operator \(P \xrightarrow{j} P\) on a preorder \(P\)

A monotone map such that for all \(p \in P\) we have:

\(p \leq j(p)\)

\(j(j(p)) \cong j(p)\)

Linked by

Eval as closure(1)

Example of a closure operator

Think of the preorder of arithmetic expressions such as \(12, 4+2+4, 9*(2+3)\), where the \(\leq\) relation denotes whether an expression can be simplified to another.

A computer program \(j\) that evaluates expressions is a monotonic function from the preorder to itself (if you can reduce x to y, then \(j(x)\) should be able to be rewritten as \(j(y)\)).

The requirements of closure operator say that \(j\) should be a simplification, and that trying to reduce an expression which has already been reduced will do nothing further.

Adjunctions from closures(1)

Just as adjunctions give rise to closure operators, from every closure operator we may construct an adjunction.

Let \(P \xrightarrow{j} P\) be a closure operator.

Get a new preorder by looking at a subset of \(P\) fixed by \(j\).

\(fix_j\) defined as \(\{p \in P\ |\ j(p)\cong p\}\)

Define a left adjoint \(P \xrightarrow{j} fix_j\) and right adjoint \(fix_j \xhookrightarrow{g} P\) as simply the inclusion function.

To see that \(j \dashv g\), we need to verify \(j(p) \leq q \iff p \leq q\) for all \(p \in P\) and \(q \in fix_j\).

Show \(\rightarrow\):

Because \(j\) is a closure operator, \(p \leq j(p)\)

\(j(p) \leq q\) implies \(p \leq q\) by transitivity.

Show \(\leftarrow\):

By monotonicity of \(j\) we have \(p \leq q\) implying \(j(p) \leq j(q)\)

\(q\) is a fix point, so the RHS is congruent to \(q\), giving \(j(p) \leq q\).

Closures in logic(1)

Examples of closure operators from logic.

Take set of all propositions as a preorder, where \(p \leq q\) iff \(p\) implies \(q\).

Some modal operators are closure operators

E.g. \(j(p)\) means “Assuming Bob is in Chicago, p”

\(p \implies j(p)\) (the logic is monotonic: adding assumptions does not make something true into something false).

\(j(j(p)) = j(p)\) (the assumption is idempotent)

Exercise 1-119(2)

Given \(f \dashv g\), prove that the composition of left and right adjoint monotone maps is a closure operator

Show \(p \leq (f;g)(p)\)

Show \((f;g;f;g)(p) \cong (f;g)(p)\)

Solution(1)

This is one of the conditions of adjoint functors: \(p \leq g(f(p))\)

The \(\leq\) direction is an extension of the above: \(p \leq g(f(p)) \leq g(f(g(f(p))))\)

Galois property: \(q \geq f(g(q))\), substitute \(f(p)\) for \(q\) to get \(f(p) \geq f(g(f(p)))\).

Because \(g\) is a monotone map, we can apply it to both sides to get \(g(f(p)) \geq g(f(g(f(p))))\)
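
As a concrete check of this exercise, one can take the Galois connection \(f_! \dashv f^*\) between power sets and verify that the composite is a closure operator. This is a sketch under an assumed example function \(f\) (any finite function would do); the names are illustrative.

```python
from itertools import combinations

X = [1, 2, 3]
f = {1: 2, 2: 2, 3: 4}    # assumed example function X -> {1, 2, 3, 4}

def subsets(xs):
    return [frozenset(c) for r in range(len(xs) + 1) for c in combinations(xs, r)]

def direct_image(A):      # left adjoint f_!
    return frozenset(f[a] for a in A)

def pullback(B):          # right adjoint f^*
    return frozenset(a for a in X if f[a] in B)

def j(A):                 # the composite (f_! ; f^*), a map P(X) -> P(X)
    return pullback(direct_image(A))

all_A = subsets(X)
extensive = all(A <= j(A) for A in all_A)          # p <= j(p)
idempotent = all(j(j(A)) == j(A) for A in all_A)   # j(j(p)) = j(p)
monotone = all(j(A) <= j(B) for A in all_A for B in all_A if A <= B)

print(extensive, idempotent, monotone)
print(sorted(j(frozenset({1}))))   # apples sharing a bucket with apple 1
```

Here \(j\) enlarges a set of apples to include every apple that shares a bucket with one of them, which is a closure in the informal sense as well.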

Level shifting(3)

Preorder of relations(1)

‘Level shifting’

Given any set \(S\), there is a set \(\mathbf{Rel}(S)\) of binary relations on \(S\) (i.e. \(\mathbb{P}(S \times S)\))

This power set can be given a preorder structure using the subset relation.

A subset of possible relations satisfy the axioms of preorder relations. \(\mathbf{Pos}(S) \subseteq \mathbb{P}(S \times S)\) which again inherits a preorder structure from the subset relation

A preorder on the possible preorder structures of \(S\), that’s a level shift.

The inclusion map \(\mathbf{Pos}(S) \hookrightarrow \mathbf{Rel}(S)\) is the right adjoint of a Galois connection; its left adjoint is \(\mathbf{Rel}(S)\overset{Cl}{\twoheadrightarrow} \mathbf{Pos}(S)\), which takes the reflexive and transitive closure of an arbitrary relation.

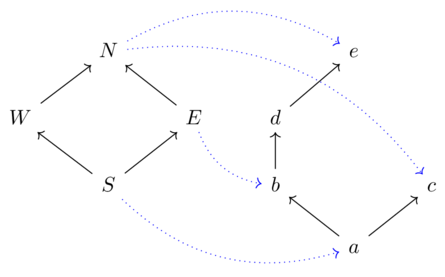

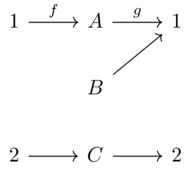

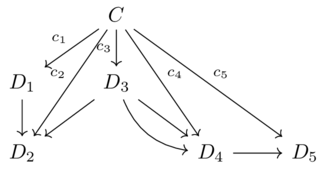

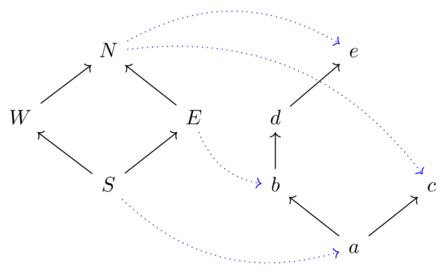

Exercise 1-125(2)

Let \(S=\{1,2,3\}\)

Come up with any preorder relation on \(S\), and define \(U(\leq):=\{(s_1,s_2)\ |\ s_1 \leq s_2\}\) (the relation ‘underlying’ the preorder. Note \(\mathbf{Pos}(S) \xhookrightarrow{U} \mathbf{Rel}(S)\))

Pick binary relations such that \(Q \subseteq U(\leq)\) and \(Q' \not \subseteq U(\leq)\)

We want to check that the reflexive/transitive closure operation \(Cl\) is really left adjoint to the underlying relation \(U\).

The meaning of \(Cl \dashv U\) is \(Cl(R) \sqsubset \leq \iff R \sqsubset U(\leq)\), where \(\sqsubset\) is the order relation on \(\mathbf{Pos}(S)\)

Concretely show that \(Cl(Q) \sqsubset \leq\)

Concretely show that \(Cl(Q') \not \sqsubset \leq\)

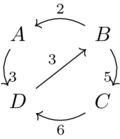

Solution(1)

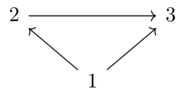

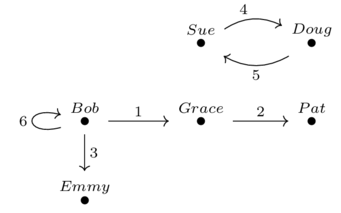

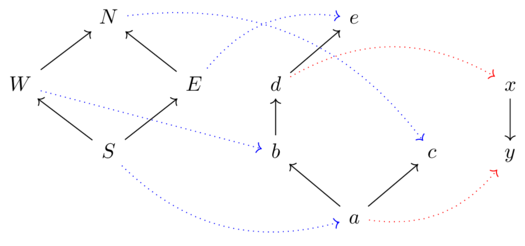

Let the preorder be given by this diagram (with implicit reflexive arrows):

Let \(Q\) be given by the following diagram

and let \(Q'=S\times S\)

\(Cl(Q) = \{11,12,22,33\}\) \(\sqsubset\) \(\leq = \{11,22,33,12,23,13\}\)

\(Cl(Q') = Q' = S \times S\) \(\not \sqsubset\) \(\leq\) (reason: \((3,1) \in S \times S\) but \((3,1) \not \in \leq\))
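
The closure and the two comparisons can also be computed directly. In the sketch below, the preorder is the one listed in the solution; the relation `Q = {(1, 2)}` is an assumption (the diagram is not reproduced here), chosen because its closure is the set \(\{11,12,22,33\}\) stated above.

```python
from itertools import product

S = [1, 2, 3]

def closure(R):
    """Reflexive and transitive closure of a relation R on S (a set of pairs)."""
    result = set(R) | {(s, s) for s in S}
    changed = True
    while changed:
        changed = False
        # product() snapshots its input, so adding to result inside the loop is safe
        for (a, b), (c, d) in product(list(result), repeat=2):
            if b == c and (a, d) not in result:
                result.add((a, d))
                changed = True
    return result

leq = {(1, 1), (2, 2), (3, 3), (1, 2), (2, 3), (1, 3)}   # U(<=) from the solution
Q = {(1, 2)}                     # assumed relation contained in U(<=)
Q_prime = set(product(S, S))     # S x S, not contained in U(<=)

for R in (Q, Q_prime):
    # Cl(R) below <=  iff  R below U(<=)
    print(closure(R) <= leq, R <= leq)
```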

Chapter 2: Resource theories(112)

Getting from A to B(1)

Want to be able to answer questions about resources like:

Given what I have, is it possible to get what I want?

Given what I have, what is the minimum cost to get what I want?

Given what I have, what is the set of ways to get what I want?

Monoidal preorders will allow us to build new recipes from old ones.

Resources are not always consumed when used.

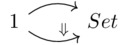

A \(\mathcal{V}\) category is a set of objects, which one may think of as points on a map

\(\mathcal{V}\) somehow ’structures the question’ of getting from point a to b

\(\mathcal{V} = Bool\) answers the question of whether we can get to b

\(\mathcal{V} = Cost\) can help answer how to get to b with minimum cost.

\(\mathcal{V} = \mathbf{Set}\) can yield a set of methods to get to b.

Symmetric monoidal preorders(40)

Definition and first examples(9)

The notation for monoidal product and unit may vary depending on context. \(I, \otimes\) are defaults but it may be best to use \((0,+),(1,*),(true, \land)\) (etc.)

Symmetric monoidal structure on a preorder(1)

A symmetric monoidal structure on a preorder \((X, \leq)\)

Two additional constituents:

A monoidal unit \(I \in X\)

A monoidal product \(X \times X \xrightarrow{\otimes} X\)

Satisfying the following properties:

Monotonicity: \(\forall x_1,x_2,y_1,y_2 \in X: x_1 \leq y_1 \land x_2 \leq y_2 \implies x_1 \otimes x_2 \leq y_1 \otimes y_2\)

Unitality: \(\forall x \in X: I \otimes x = x = x \otimes I\)

Associativity: \(\forall x,y,z \in X: (x \otimes y) \otimes z = x \otimes (y\otimes z)\)

Symmetry: \(\forall x,y \in X: x \otimes y = y \otimes x\)

Weak monoidal structure on a preorder(1)

A weak monoidal structure on a preorder \((X, \leq)\)

Definition is identical to a symmetric monoidal structure, replacing all \(=\) signs with \(\cong\) signs.

Discrete SMP(1)

A monoid is a set \(M\) with a monoid unit \(e \in M\) and associative monoid multiplication \(M \times M \xrightarrow{\star} M\) such that \(m \star e=m=e \star m\)

Every set \(S\) determines a discrete preorder: \(\mathbf{Disc}_S\)

It is easy to check that if \((M,e,\star)\) is a commutative monoid then \((\mathbf{Disc}_M, =, e, \star)\) is a symmetric monoidal preorder.

Linked by

Poker hands(1)

Let \(H\) be the set of all poker hands, ordered by \(h \leq h'\) if \(h'\) beats or ties hand \(h\).

One can propose a monoidal product by assigning \(h_1 \otimes h_2\) to be “the best hand one can form out of the ten cards in \(h_1 \bigcup h_2\)"

This proposal will fail monotonicity with the following example:

\(h_1 := \{2\heartsuit, 3\heartsuit,10 \spadesuit,J\spadesuit,Q\spadesuit\} \leq i_1 := \{4\clubsuit,4\spadesuit,6\heartsuit,6\diamondsuit,10\diamondsuit\}\)

\(h_2 := \{2\diamondsuit,3\diamondsuit,4\diamondsuit,K\spadesuit,A\spadesuit\} \leq i_2 := \{5\spadesuit,5\heartsuit,7\heartsuit,J\diamondsuit,Q\diamondsuit\}\)

\(h_1 \otimes h_2=\{10\spadesuit,J\spadesuit,Q\spadesuit,K\spadesuit,A\spadesuit\} \not \leq i_1 \otimes i_2 = \{5\spadesuit, 5\heartsuit,6\heartsuit,6\diamondsuit,Q\diamondsuit\}\)

Exercise 2-5(2)

Consider the reals ordered by our normal \(\leq\) relation. Do \((1,*)\) work as unit and product for a symmetric monoidal structure?

Solution(1)

No, monotonicity fails: \(x_1\leq y_1 \land x_2 \leq y_2 \not \implies x_1x_2 \leq y_1y_2\) (Counterexample: \(x_1=x_2=-1, y_1=y_2=0\))

Exercise 2-8(2)

Check that if \((M,e,\star)\) is a commutative monoid then \((\mathbf{Disc}_M, =, e, \star)\) is a symmetric monoidal preorder, as described in this example.

Solution(1)

Monotonicity is the only tricky one, and is addressed due to the triviality of the discrete preorder.

We can replace \(x \leq y\) with \(x \leq x\) because it is a discrete preorder.

\(x_1 \leq x_1 \land x_2 \leq x_2 \implies x_1 \otimes x_2 \leq x_1 \otimes x_2\)

\(True \land True \implies True\) holds trivially, due to reflexivity of the preorder.

Unitality/associativity comes from unitality/associativity of monoid

Symmetry comes from commutativity of the monoid.

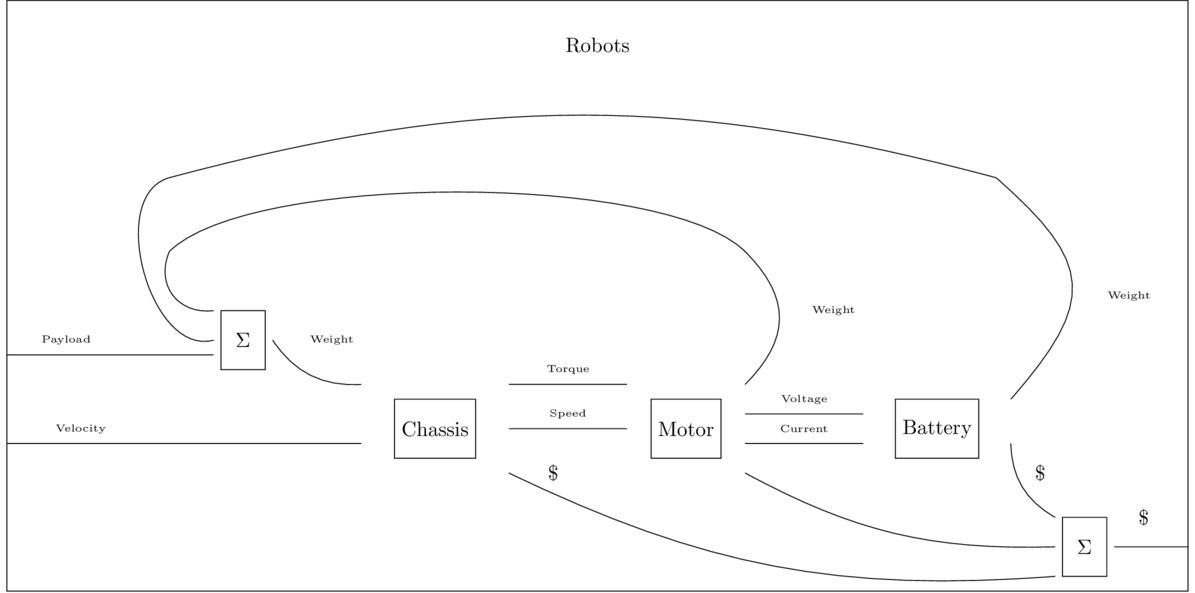

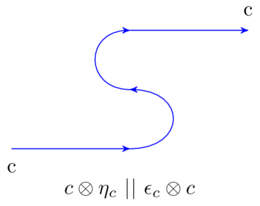

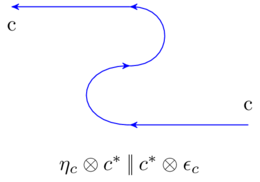

Introducing wiring diagrams(3)

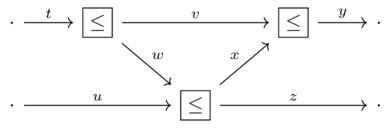

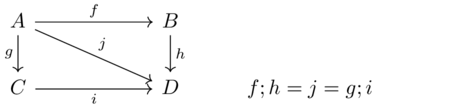

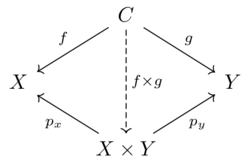

Exercise 2-20(2)

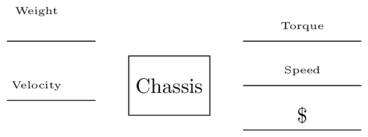

Given the assertions in the interior of this wiring diagram:

Prove that the conclusion follows using the rules of symmetric monoidal preorders

Make sure to use reflexivity and transitivity

How do you know that symmetry axiom does not need to be invoked?

Solution(1)

Assertions:

\(t \leq v+w\)

\(w+u \leq x+z\)

\(v+x \leq y\)

Conclusion: \(t+u \leq y+z\)

Proof:

\((t)+(u) \leq (v+w)+(u)\) - from monotonicity and reflexivity of u

\(= v+(w+u)\) - associativity

\(\leq v+(x+z)\) - monotonicity and reflexivity of v

\(= (v+x)+z\) - associativity

\(\leq y+z\) - monotonicity and reflexivity of z

Symmetry was not needed because the diagram had no crossing wires.

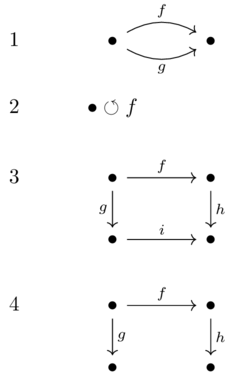

Visual representations for building new relationships from old.

For a preorder without a monoidal structure, we can only chain relationships linearly (due to transitivity).

For a symmetric monoidal structure, we can combine relationships in series and in parallel.

Call boxes and wires icons

Any element \(x \in X\) can be a label for a wire. Given x and y, we can write them as two wires in parallel or one wire \(x \otimes y\); these are two ways of representing the same thing.

Consider a wire labeled \(I\) to be equivalent to the absence of a wire.

Given a \(\leq\) block, we say a wiring diagram is valid if the monoidal product of elements on the left is less than those on the right.

Let’s consider the properties of the order structure:

Reflexivity: a diagram consisting of just one wire is always valid.

Transitivity: diagrams can be connected together if outputs = inputs

Monotonicity: Stacking two valid boxes in parallel is still valid.

Unitality: We need not worry about \(I\) or blank space

Associativity: Need not worry about building diagrams from top-to-bottom or vice-versa.

Symmetry: A diagram is valid even if its wires cross.

One may regard crossing wires as another icon in the iconography.

Wiring diagrams can be thought of as graphical proofs

If subdiagrams are true, then the outer diagram is true.

Applied examples(1)

Resource theories:

As discussed here, we consider ’static’ notions.

Chemistry: reactants + catalyst \(<\) products + catalyst

Manufacturing: you can trash anything you want, and it disappears from view (requires \(\forall x: x \leq I\))

Informatics: in addition to trashing, information can be copied (requires \(x \leq x + x\))

Abstract examples(18)

Booleans important for the notion of enrichment.

Enriching in a symmetric monoidal preorder \(\mathcal{V}=(V,\leq,I,\otimes)\) means "letting \(\mathcal{V}\) structure the question of getting from a to b"

Consider \(\mathbf{Bool}=(\mathbb{B},\leq,true,\land)\)

The fact that the underlying set is \(\{false, true\}\) means that “getting from a to b is a true/false question"

The fact that \(true\) is the monoidal unit translates to the saying “you can always get from a to a"

The fact that \(\land\) is the monoidal product means “if you can get from a to b and b to c then you can get from a to c"

The ‘if ... then ...’ form of the previous sentence is coming from the order relation \(\leq\).

Opposite SMP(2)

The opposite \((X, \geq, I, \otimes)\) of a symmetric monoidal preorder \((X, \leq, I, \otimes)\) is still a symmetric monoidal preorder

Proof(1)

Monotonicity: \(x_1 \geq y_1 \land x_2 \geq y_2 \implies x_1 \otimes x_2 \geq y_1 \otimes y_2\)

This holds because monotonicity holds in the original preorder (\(a\geq b \equiv b \leq a\)).

Unitality, symmetry, and associativity are not affected by the preorder.

Natural numbers SMP(1)

There is a symmetric monoidal structure on \((\mathbb{N}, \leq)\) where the monoidal unit is zero and the product is \(+\) (\(6+4=10\)). Monotonicity (\((x_1,x_2)\leq(y_1,y_2) \implies x_1+x_2 \leq y_1+y_2\)) and other conditions hold.

Divisibility SMP(1)

Recall the divisibility order \((\mathbb{N}, |)\)

There is a symmetric monoidal structure on this preorder with unit \(1\) and product \(*\).

Monotonicity (\(x_1|y_1 \land x_2|y_2 \implies x_1*x_2 | y_1*y_2\)) and other conditions hold.

Cost SMP(1)

Lawvere’s symmetric monoidal preorder, Cost.

Let \([0,\infty]\) represent the non-negative real numbers with infinity. Also take the usual notion of \(\geq\).

There is a monoidal structure for this preorder: \(\mathbf{Cost}:=([0,\infty],\geq,0,+)\)

The monoidal unit being zero means “you can get from a to a at no cost."

The product being + means “getting from a to c is at most the cost of a to b plus b to c"

The ‘at most’ above comes from the \(\geq\).

Linked by

Exercise 2-31(2)

Show there is a symmetric monoidal structure on \((\mathbb{N}, \leq)\) where the monoidal product is multiplication (e.g. \(6*4=24\)). What should the monoidal unit be?

Solution(1)

Let the monoidal product be the standard product for integers, with 1 as unit.

Monotonicity: \((x_1,x_2)\leq (y_1,y_2) \implies x_1x_2 \leq y_1y_2\)

Unitality: \(1*x_1=x_1=x_1*1\)

Associativity: \(a*(b*c)=(a*b)*c\)

Symmetry: \(a*b=b*a\)

Exercise 2-33(2)

Recall the divisibility order \((\mathbb{N}, |)\). Someone proposes \((0,+)\) as the monoidal unit and product. Does this satisfy the conditions of a symmetric monoidal structure?

Solution(1)

Conditions 2-4 are satisfied, but not monotonicity: \(1|1 \land 2|4\) but not \(3 | 5\)
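
A brute-force search over a small range finds this counterexample and confirms that the product \(*\) has none in the same range. This is only a sketch: the bound of 7 is arbitrary, 0 is left out to avoid dividing by zero, and a finite search is of course not a proof that \(*\) is monotone.

```python
from itertools import product

N = range(1, 8)   # small sample of positive naturals; an illustrative bound

def divides(a, b):
    return b % a == 0

def monotonicity_counterexamples(op):
    """All (x1, x2, y1, y2) in the sample with x1|y1 and x2|y2
    but op(x1, x2) not dividing op(y1, y2)."""
    return [(x1, x2, y1, y2)
            for x1, x2, y1, y2 in product(N, repeat=4)
            if divides(x1, y1) and divides(x2, y2)
            and not divides(op(x1, x2), op(y1, y2))]

plus_failures = monotonicity_counterexamples(lambda a, b: a + b)
times_failures = monotonicity_counterexamples(lambda a, b: a * b)

print(len(plus_failures), len(times_failures))
```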

Exercise 2-34(2)

Consider the preorder defined by the Hasse diagram \(\boxed{no \rightarrow maybe \rightarrow yes}\)

Consider a potential monoidal structure with \(yes\) as the unit and \(min\) as the product.

Fill out a reasonable definition of \(min\) and check that it satisfies the conditions.

Solution(1)

| \(min\) | no | maybe | yes |
|---|---|---|---|
| no | no | no | no |
| maybe | no | maybe | maybe |
| yes | no | maybe | yes |

Monotonicity: \(x_1 \leq y_1 \land x_2 \leq y_2 \implies x_1x_2 \leq y_1y_2\)

Suppose without loss of generality that \(x_1\leq x_2\)

then \(x_1x_2=x_1\) and \(y_1y_2 = y_1\) or \(y_2\)

In the first case, we know this is true because we assumed \(x_1 \leq y_1\)

In the second case, we know it from transitivity: \(x_1 \leq x_2\leq y_2\)

Unitality: \(min(x,yes)=x\)

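Associativity: holds, because \(min\) on a totally ordered set is associative (either bracketing returns the least of the three elements).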

Symmetry: table is symmetric.
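
Since the preorder has only three elements, all four conditions can be checked exhaustively. The sketch below encodes the order by an assumed numeric `rank` (a convenience of this example, not part of the exercise).

```python
from itertools import product

rank = {"no": 0, "maybe": 1, "yes": 2}   # position in the Hasse diagram
X = list(rank)
unit = "yes"

def leq(a, b):
    return rank[a] <= rank[b]

def tensor(a, b):
    """min with respect to the order no <= maybe <= yes."""
    return a if leq(a, b) else b

monotone = all(leq(tensor(x1, x2), tensor(y1, y2))
               for x1, x2, y1, y2 in product(X, repeat=4)
               if leq(x1, y1) and leq(x2, y2))
unital = all(tensor(unit, x) == x == tensor(x, unit) for x in X)
associative = all(tensor(tensor(x, y), z) == tensor(x, tensor(y, z))
                  for x, y, z in product(X, repeat=3))
symmetric = all(tensor(x, y) == tensor(y, x) for x, y in product(X, repeat=2))

print(monotone, unital, associative, symmetric)
```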

Linked by

Exercise 2-35(2)

Check that there is a symmetric monoidal structure on the power set of \(S\) ordered by subset relation. (The unit is \(S\) and product is \(\cap\))

Solution(1)

Monotonicity: \(x_1 \subseteq y_1 \land x_2 \subseteq y_2 \implies x_1 \cap x_2 \subseteq y_1 \cap y_2\)

This is true: if something is in both \(x_1,x_2\), then it is in both \(y_1,y_2\), i.e. in their intersection.

Unitality: \(x \cap S = x = S \cap x\), since \(\forall x \in P(S): x \subseteq S\).

Associativity and symmetry come from associativity and symmetry of \(\cap\) operator.

Exercise 2-36(2)

Let \(Prop^\mathbb{N}\) be the set of all mathematical statements one can make about a natural number.

Examples:

n is a prime

n = 2

\(n \geq 11\)

We say \(P \leq Q\) if for all \(n \in \mathbb{N}\), \(P(n) \implies Q(n)\)

Define a monoidal unit and product on \((Prop^\mathbb{N}, \leq)\).

Solution(1)

Let the unit be \(\lambda x. true\) and product be \(\land\)

monotonicity: \(P_1(x)\leq Q_1(x) \land P_2(x) \leq Q_2(x) \implies (P_1 \land P_2)(x) \leq (Q_1 \land Q_2)(x)\)

If the \(P\) properties hold for a given number, then each of the \(Q\) properties hold

unitality, associativity, symmetry: same as \(\mathbf{Bool}\)

Exercise 2-40(2)

Consider \(\mathbf{Cost}^{op}\). What is it as a preorder? What is its unit and product?

Solution(1)

As a preorder, the domain is still \([0,\infty]\) and ordered by the natural \(\leq\) relation. The unit and product are unchanged by taking the opposite preorder, so they are still \(0, +\) respectively.

Monoidal monotone maps(9)

Monoidal monotones are examples of monoidal functors, specifically lax ones. Oplax functors are a dual notion which has the inequalities reversed.

Monoidal monotone(1)

A monoidal monotone map from \((P,\leq_P,I_P,\otimes_P)\) to \((Q, \leq_Q,I_Q,\otimes_Q)\). Also, a strong monoidal monotone map and a strict monoidal monotone map

A monotone map \((P,\leq_P) \xrightarrow{f} (Q,\leq_Q)\) satisfying two conditions:

\(I_Q \leq_Q f(I_P)\)

\(\forall p_1,p_2 \in P:\) \(f(p_1)\ \otimes_Q\ f(p_2)\ \leq_Q\ f(p_1\ \otimes_P\ p_2)\)

If the \(\leq\) are replaced with \(\cong\), the map is strong, and if replaced with \(=\), it is strict.

Natural Real monoidal monotones(1)

Strict monoidal monotone injection \((\mathbb{N},\leq, 0, +) \xhookrightarrow{i} (\mathbb{R},\leq,0,+)\)

There is also a monoidal monotone \((\mathbb{R}^+,\leq, 0, +) \xrightarrow{\lfloor x \rfloor} (\mathbb{N},\leq,0,+)\)

Monotonic: \(x \leq_\mathbb{R} y \implies \lfloor x \rfloor \leq \lfloor y \rfloor\)

But it is neither strict nor strong: \(\lfloor 0.5 \rfloor + \lfloor 0.5 \rfloor \not \cong \lfloor 0.5+0.5 \rfloor\)
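
These claims about the floor function can be spot-checked numerically. The sketch below uses an arbitrary sample of non-negative multiples of \(0.25\) (which are exact in floating point); it illustrates the conditions rather than proving them.

```python
import math
from itertools import product

samples = [k / 4 for k in range(0, 21)]   # 0.0, 0.25, ..., 5.0 (an arbitrary sample)
pairs = list(product(samples, repeat=2))

# Monotone: x <= y implies floor(x) <= floor(y)
monotone = all(math.floor(x) <= math.floor(y) for x, y in pairs if x <= y)
# Unit condition: I_Q <= f(I_P), i.e. 0 <= floor(0)
unit_ok = 0 <= math.floor(0)
# Lax condition: f(x) + f(y) <= f(x + y)
lax = all(math.floor(x) + math.floor(y) <= math.floor(x + y) for x, y in pairs)
# Strictness would need equality everywhere
strict = all(math.floor(x) + math.floor(y) == math.floor(x + y) for x, y in pairs)

print(monotone, unit_ok, lax, strict)
```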

Exercise 2-43(2)

Consider a proposed monoidal monotone \(\mathbf{Bool}\xrightarrow{g}\mathbf{Cost}\) with \(g(false)=\infty, g(true)=0\)

Check that the map is monotonic and that it satisfies both properties of monoidal monotones.

Is g strict?

Solution(1)

It is monotonic: \(\forall a,b \in \mathbb{B}: a\leq b \implies g(a)\leq g(b)\)

there is only one nonidentity case in \(\mathbf{Bool}\) to cover, and it is true that \(\infty\ \leq_\mathbf{Cost}\ 0\).

Condition on units: \(0 \leq_\mathbf{Cost} g(true)\) (actually, it is equal)

In \(\mathbf{Cost}\): \(g(x) + g(y) \geq g(x \land y)\)

if both true/false, the equality condition holds.

If one is true and one is false, then LHS and RHS are \(\infty\) (as \(x \land y = False\)).

Therefore this is a strict monoidal monotone.

Exercise 2-44(2)

Consider the following functions \(\mathbf{Cost} \xrightarrow{d,u} \mathbf{Bool}\)

\(d(x>0)\mapsto false,\ d(0)\mapsto true\)

\(u(x=\infty)\mapsto false,\ u(x < \infty) \mapsto true\)

For both of these, answer:

Is it monotonic?

If so, does it satisfy the monoidal monotone properties?

If so, is it strict?

Solution(1)

The function \(d\) asks “Is it zero?”, and the function \(u\) asks “Is it finite?”.

Both of these questions are monotone: as we traverse forward in \(\leq_{Cost}\), we traverse towards being zero or being finite.

The first monoidal monotone axiom is satisfied in both because the unit (\(0\)) is mapped to the unit (\(True\)).

The second monoidal monotone axiom holds for both because addition preserves both things being zero (or not) and both things being finite (or not).

These are strict because, in \(Bool\), equality and congruence coincide.
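
The same conclusions can be checked on a handful of sample costs. This is a sketch: the sample values are arbitrary, Python's `False <= True` stands in for the order on \(\mathbf{Bool}\), and the helper names `is_monotone` and `is_strict` are made up for this example.

```python
import math
from itertools import product

costs = [0, 1, 2.5, math.inf]   # a small sample of [0, inf]
pairs = list(product(costs, repeat=2))

def d(x):   # "Is it zero?"
    return x == 0

def u(x):   # "Is it finite?"
    return x < math.inf

def is_monotone(h):
    # x <=_Cost y means x >= y as numbers; Bool is ordered False <= True
    return all(h(x) <= h(y) for x, y in pairs if x >= y)

def is_strict(h):
    # the unit 0 goes to the unit True, and h(x) and h(y) equals h(x + y)
    return h(0) is True and all((h(x) and h(y)) == h(x + y) for x, y in pairs)

print(is_monotone(d), is_strict(d))
print(is_monotone(u), is_strict(u))
```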

Linked by

Exercise 2-45(2)

Is \((\mathbb{N},\leq,1,*)\) a symmetric monoidal preorder?

If so, does there exist a monoidal monotone \((\mathbb{N},\leq,0,+) \rightarrow (\mathbb{N},\leq,1,*)\)

Is \((\mathbb{Z},\leq,1,*)\) a symmetric monoidal preorder?

Solution(1)

Yes. Monotonicity holds, and multiplication by 1 is unital. The operator is symmetric and associative.

Exponentiation (say, by \(2\)) is a strict monoidal monotone.

\(1 = 2^0\) and \(2^x * 2^y = 2^{x+y}\)

No: monotonicity does not hold, since multiplying negative numbers can give a larger number (e.g. \(-1 \leq 0\), but \((-1)*(-1) = 1 \not\leq 0 = 0*0\)).

Enrichment(20)

V-categories(2)

V-category(1)

A \(\mathcal{V}\) category, given a symmetric monoidal preorder \(\mathcal{V}=(V,\leq,I,\otimes)\)

To specify the category \(\mathcal{X}\), one specifies:

A set \(Ob(\mathcal{X})\) whose elements are called objects

A hom-object for every pair of objects in \(Ob(\mathcal{X})\), written \(\mathcal{X}(x,y) \in V\)

The following properties must be satisfied:

\(\forall x \in Ob(\mathcal{X}):\) \(I \leq \mathcal{X}(x,x)\)

\(\forall x,y,z \in Ob(\mathcal{X}):\) \(\mathcal{X}(x,y)\otimes\mathcal{X}(y,z) \leq \mathcal{X}(x,z)\)

We call \(\mathcal{V}\) the base of enrichment for \(\mathcal{X}\) or say that \(\mathcal{X}\) is enriched in \(\mathcal{V}\).

Bool-category(1)

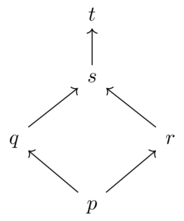

Consider the following preorder:

As a Bool-category, the objects are \(Ob(\mathcal{X})=\{p,q,r,s,t\}\).

For every pair, we need an element of Bool, so make it true if \(x\leq y\)

\(true\) is the monoidal unit of Bool, and this obeys the two constraints of a \(\mathcal{V}\) category.

We can represent the binary relation (hom-object) with a table:

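| \(\leq\) | p | q | r | s | t |
|---|---|---|---|---|---|
| p | T | T | T | T | T |
| q | F | T | F | T | T |
| r | F | F | T | T | T |
| s | F | F | F | T | T |
| t | F | F | F | F | T |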
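
The two \(\mathcal{V}\)-category constraints can be checked against this table by brute force. A minimal sketch, with the table transcribed row by row (the encoding as strings of `T`/`F` is just a convenience of this example):

```python
objs = ["p", "q", "r", "s", "t"]
rows = {            # hom-objects X(x, y), copied from the table (T = True, F = False)
    "p": "TTTTT",
    "q": "FTFTT",
    "r": "FFTTT",
    "s": "FFFTT",
    "t": "FFFFT",
}
hom = {(x, y): rows[x][j] == "T" for x in objs for j, y in enumerate(objs)}

# Constraint 1: I <= X(x, x), with I = True
identities_ok = all(hom[(x, x)] for x in objs)
# Constraint 2: X(x, y) and X(y, z) <= X(x, z), using False <= True
composition_ok = all((hom[(x, y)] and hom[(y, z)]) <= hom[(x, z)]
                     for x in objs for y in objs for z in objs)

print(identities_ok, composition_ok)
```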

Preorders as Bool-categories(2)

Preorders are Bool-categories(2)

There is a one-to-one correspondence between preorders and Bool-categories

Proof(1)

Construct preorder \((X,\leq_X)\) from any Bool-category \(\mathcal{X}\)

Let \(X\) be \(Ob(\mathcal{X})\) and \(x\ \leq_X\ y\) be defined as \(\mathcal{X}(x,y)=true\)

This is reflexive and transitive because of the two constraints we put on enriched categories.

The first constraint says that \(true \leq (x \leq_X x)\)

The second constraint says \((x \leq_X y) \land (y \leq_X z) \leq (x \leq_X z)\)

Construct Bool-category from a preorder:

Let \(Ob(X)=X\) and define \(\mathcal{X}(x,y)=true\) iff \(x \leq_X y\)

The two constraints on preorders give us our two required constraints on enriched categories.

Lawvere metric spaces(10)

Can convert any weighted graph into a Lawvere metric space, where the distance is the sum of weights along the shortest path.

It can be hard to see the shortest path by inspection, but a matrix power iteration method (starting from just a matrix of edge weights) is possible.

Metric space(1)

A metric space \((X,d)\)

A set \(X\) whose elements are called points

A function \(X \times X \xrightarrow{d} \mathbb{R}_{\geq 0}\) which gives the distance between two points.

These must satisfy three properties:

\(d(x,y)=0 \iff x=y\)

\(d(x,y)=d(y,x)\)

\(d(x,y)+d(y,z)\geq d(x,z)\) (triangle inequality)

An extended metric space includes \(\infty\) in the codomain of the distance function \(d\).

Linked by

Lawvere metric space(1)

A Lawvere metric space

A Cost-category, i.e. a category enriched in the symmetric monoidal preorder \(\mathbf{Cost}=([0,\infty],\geq,0,+)\).

\(X\) is given as \(Ob(\mathcal{X})\)

\(d(x,y)\) is given as \(\mathcal{X}(x,y)\)

The axiomatic properties of a category enriched in Cost are:

\(0 \geq d(x,x)\)

\(d(x,y)+d(y,z) \geq d(x,z)\)

Linked by

Reals metric space(1)

The set \(\mathbb{R}\) can be given a metric space structure, with \(d(x,y)=|x-y|\).

Exercise 2-52(2)

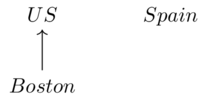

Imagine the points of a metric space are whole regions, like US, Spain, and Boston. Distance is "Given the worst case scenario, how far do you have to travel to get from region A to B?"

This actually breaks our symmetry requirement: \(d(Boston,US)=0, d(US,Boston) > 0\)

Which distance is bigger in this framework: \(d(Spain,US)\) or \(d(US,Spain)\)?

Solution(1)

\(d(US,Spain)\) is bigger: the US is much larger than Spain, so there is much more room for the worst-case starting point in the US to be far from Spain.

A bigger first argument can only make the distance worse, all else equal. A bigger second argument can only make it better, all else equal.

Linked by

Exercise 2-55(2)

Consider the symmetric monoidal preorder \((\mathbb{R},\geq,0,+)\) which is the same as Cost but does not include \(\infty\). How do you characterize the difference between this and a Lawvere metric space in the sense of definition 2.46?

Solution(1)

It is a metric space in which points may only be finitely-far apart.

Exercise 2-60(2)

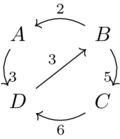

What is the distance matrix represented by this graph?

Solution(1)

| \(\rightarrow\) | A | B | C | D |
|---|---|---|---|---|
| A | 0 | 6 | 3 | 11 |
| B | 2 | 0 | 5 | 5 |
| C | 5 | 3 | 0 | 8 |
| D | 11 | 9 | 6 | 0 |
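
The matrix power iteration mentioned at the start of this section can be sketched as repeated matrix multiplication with \((+, *)\) replaced by \((min, +)\). The edge weights below are an assumption, since the figure is not reproduced here; they were chosen because they yield the distance matrix above.

```python
import math

INF = math.inf
nodes = ["A", "B", "C", "D"]
# Assumed edge weights (hypothetical reading of the graph, consistent with the matrix above)
edges = {("A", "C"): 3, ("C", "B"): 3, ("B", "A"): 2, ("B", "D"): 5, ("D", "C"): 6}

def weight_matrix():
    """0 on the diagonal, the edge weight where an edge exists, infinity otherwise."""
    return [[0 if x == y else edges.get((x, y), INF) for y in nodes] for x in nodes]

def min_plus(M, N):
    """Matrix product with (+, *) replaced by (min, +)."""
    n = len(M)
    return [[min(M[i][k] + N[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def distance_matrix():
    M = weight_matrix()
    D = M
    # Each product allows one more edge; n - 1 products cover paths of up to n edges,
    # which is more than enough since shortest paths use at most n - 1 edges.
    for _ in range(len(nodes) - 1):
        D = min_plus(D, M)
    return D

for name, row in zip(nodes, distance_matrix()):
    print(name, row)
```

The zero diagonal plays the role of the monoidal unit of \(\mathbf{Cost}\), so each product keeps the shorter paths already found while allowing one extra edge.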

Linked by

V-variations on preorders and metric spaces(6)

Exercise 2-61(2)

Recall the symmetric monoidal preorder \(\mathbf{NMY} := (P,\leq, yes, min)\) from Exercise 2.34. Interpret what a NMY-category is.

Solution(1)

It is like a graph where some edges are ‘maybe’ edges. We can ask the question “Is there a path from a to b?" and if there is a true path we will get a ‘yes’. If the only paths are those which include maybe edges, then we get a ’maybe’. If there’s no path at all, we get a ‘no’.

Exercise 2-62(2)

Let \(M\) be a set and \(\mathcal{M}:=(P(M),\subseteq, M, \cap)\) be the symmetric monoidal preorder whose elements are subsets of \(M\).

Someone says "for any set \(M\), imagine it as the set of modes of transportation (e.g. car, boat, foot)". Then an \(\mathcal{M}\) category \(\mathcal{X}\) tells you all the modes that will get you from a all the way to b, for any two points \(a,b \in Ob(\mathcal{X})\)

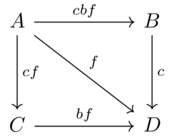

Draw a graph with four vertices and five edges, labeled with a subset of \(M=\{car,boat,foot\}\)

From this graph it is possible to construct an \(\mathcal{M}\) category where the hom-object from x to y is the union of the sets for each path from x to y, where the set of a path is the intersection of the sets along the path. Write out the corresponding 4x4 matrix of hom-objects and convince yourself this is indeed an \(\mathcal{M}\) category.

Does the person’s interpretation look right?

Solution(1)

(implicitly, no path means edge of \(\varnothing\) and self paths are \(cfb\))

Hom objects:

| \(\rightarrow\) | A | B | C | D |
|---|---|---|---|---|
| A | cbf | cbf | cf | cf |
| B | \(\varnothing\) | cbf | \(\varnothing\) | c |
| C | \(\varnothing\) | \(\varnothing\) | cbf | bf |
| D | \(\varnothing\) | \(\varnothing\) | \(\varnothing\) | cbf |

The first property (\(\forall x \in Ob(\mathcal{X}): I \leq \mathcal{X}(x,x)\)) is satisfied by noting the diagonal entries are equal to the unit.

The second property (\(\forall x,y,z \in Ob(\mathcal{X}): \mathcal{X}(x,y)\otimes\mathcal{X}(y,z) \leq \mathcal{X}(x,z)\)) can be checked looking at the following cases:

\(A \rightarrow B \rightarrow D\): \(cbf \cap c \leq cf\)

\(A \rightarrow C \rightarrow D\): \(cf \cap bf \leq cf\)

One subtlety is that we need to say that one can get from any place to itself by any means of transportation for this to make sense. Overall interpretation looks good.
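The construction described in the exercise (union over all paths of the intersection of the labels along each path) can be sketched in Python. The edge labels below are an assumption, chosen only to be consistent with the hom-object matrix above; the figure's actual five edges are not reproduced here. The helper name `hom_objects` is also hypothetical.

```python
from itertools import product

M = frozenset({"car", "boat", "foot"})
nodes = ["A", "B", "C", "D"]

# Hypothetical edge labels (an assumption, chosen to match the matrix above).
edges = {
    ("A", "B"): M,
    ("A", "C"): frozenset({"car", "foot"}),
    ("B", "D"): frozenset({"car"}),
    ("C", "D"): frozenset({"boat", "foot"}),
}


def hom_objects(nodes, edges, unit):
    """Union over all paths of the intersection of the labels along each path."""
    # A missing edge is the empty set; every node reaches itself by every
    # mode, i.e. the diagonal is the monoidal unit.
    hom = {
        (x, y): edges.get((x, y), frozenset())
        for x, y in product(nodes, repeat=2)
    }
    for x in nodes:
        hom[(x, x)] = unit
    # Floyd-Warshall style closure: join is union, monoidal product is intersection.
    for k in nodes:
        for x in nodes:
            for y in nodes:
                hom[(x, y)] = hom[(x, y)] | (hom[(x, k)] & hom[(k, y)])
    return hom


hom = hom_objects(nodes, edges, M)
print(sorted(hom[("A", "D")]))
```

Under these assumed labels, the A to D entry is the union of `cbf ∩ c` and `cf ∩ bf`, which agrees with the `cf` entry in the matrix.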

Exercise 2-63(2)

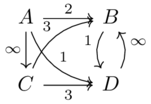

Consider the symmetric monoidal preorder \(\mathcal{W}:=(\mathbb{N}\cup\{\infty\},\leq,\infty,min)\)

Draw a small graph labeled by elements of \(\mathbb{N}\cup\{\infty\}\)

Write the matrix whose rows and columns are indexed by nodes in the graph, whose (x,y)th entry is given by the maximum over all paths p from x to y of the minimum edge label in p.

Prove that this matrix is a matrix of hom-objects for a \(\mathcal{W}\) category called \(\mathcal{X}\).

Make up an interpretation for what it means to enrich in \(\mathcal{W}\)

Solution(1)

(implicitly, no path means path of weight 0, and self paths have weight \(\infty\))

Maxmin matrix:

| \(\rightarrow\) | A | B | C | D |
|---|---|---|---|---|
| A | \(\infty\) | 3 | \(\infty\) | 3 |
| B | 0 | \(\infty\) | 0 | \(\infty\) |
| C | 0 | 3 | \(\infty\) | 3 |
| D | 0 | 1 | 0 | \(\infty\) |

Self paths are equal to the monoidal unit and it will never be the case that \(min(\mathcal{X}(A,B),\mathcal{X}(B,C)) > \mathcal{X}(A,C)\) because even in the worst-case scenario (where there is not a better path from A to C that ignores B completely), we form the best path by combining the best path from A to B with the best from B to C. We are forced to take the minimum edge label in the path, which means that the lowest \(\mathcal{X}(A,C)\) can be is actually equal to the left hand side.

The edges could represent constraints (\(\infty\) is fully unconstrained, \(0\) is fully constrained, e.g. the diameter of a pipe) and the hom-object represents the least-constrained thing that can get from one point to another. The monoidal unit says that something can be fully unconstrained if it stays where it is, and the monoidal product (min) says how to compose two different constraints in series.
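As a sanity check, the two \(\mathcal{W}\)-category axioms can be tested mechanically on the maxmin matrix given in this solution. This is a minimal sketch: the entries are copied from the solution, while the variable names are illustrative.

```python
import math
from itertools import product

INF = math.inf
nodes = ["A", "B", "C", "D"]

# Maxmin matrix copied from the solution (row = source, column = target).
rows = [
    [INF, 3, INF, 3],
    [0, INF, 0, INF],
    [0, 3, INF, 3],
    [0, 1, 0, INF],
]
hom = {
    (x, y): rows[i][j]
    for i, x in enumerate(nodes)
    for j, y in enumerate(nodes)
}

# Unit axiom: I <= X(x, x), where the monoidal unit I is infinity.
unit_ok = all(INF <= hom[(x, x)] for x in nodes)

# Composition axiom: min(X(x, y), X(y, z)) <= X(x, z) for all triples.
comp_ok = all(
    min(hom[(x, y)], hom[(y, z)]) <= hom[(x, z)]
    for x, y, z in product(nodes, repeat=3)
)

print(unit_ok, comp_ok)
```

Both checks hold for this matrix, which is consistent with the argument given in the solution.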

Constructions on V-categories(18)

Changing the base of enrichment(7)

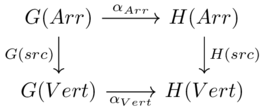

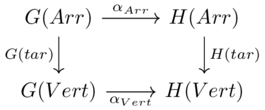

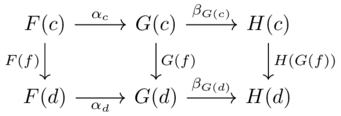

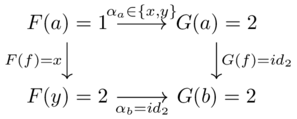

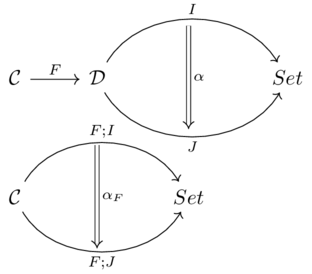

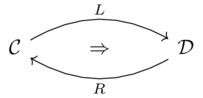

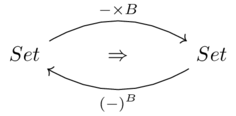

Induced V-categories from monoidal monotones(2)

Let \(\mathcal{V}\xrightarrow{f}\mathcal{W}\) be a monoidal monotone map. Given a \(\mathcal{V}\) category, called \(\mathcal{C}\), one can construct an associated \(\mathcal{W}\) category, let’s call it \(\mathcal{C}_f\)

Proof(1)

Take the same objects: \(Ob(\mathcal{C}_f):=Ob(\mathcal{C})\)

\(\mathcal{C}_f(a,b) := f(\mathcal{C}(a,b))\)

Check this obeys the definition of an enriched category:

Condition on the monoidal unit:

1. \(I_W \leq f(I_V)\) — from the first condition of a monoidal monotone map.

2. \(\forall c \in Ob(\mathcal{C}): I_V \leq \mathcal{C}(c,c)\) — first condition of an enriched category, which \(\mathcal{C}\) is

3. \(\forall c \in Ob(\mathcal{C}):f(I_V) \leq f(\mathcal{C}(c,c))\) — monotone map property

4. \(\forall c \in Ob(\mathcal{C}):f(I_V) \leq \mathcal{C}_f(c,c)\) — definition of \(\mathcal{C}_f\)

5. \(\forall c \in Ob(\mathcal{C}_f): I_W \leq \mathcal{C}_f(c,c)\) — transitivity, using 1 and 4, noting \(Ob(\mathcal{C})=Ob(\mathcal{C}_f)\)

Condition on monoidal product:

1. \(\mathcal{C}_f(c,d) \otimes_W \mathcal{C}_f(d,e) = f(\mathcal{C}(c,d)) \otimes_W f(\mathcal{C}(d,e))\) — definition of \(\mathcal{C}_f\)

2. \(f(\mathcal{C}(c,d)) \otimes_W f(\mathcal{C}(d,e)) \leq f(\mathcal{C}(c,d) \otimes_V \mathcal{C}(d,e))\) — second condition of a monoidal monotone map

3. \(\mathcal{C}(c,d) \otimes_V \mathcal{C}(d,e) \leq \mathcal{C}(c,e)\) — second condition of an enriched category

4. \(f(\mathcal{C}(c,d) \otimes_V \mathcal{C}(d,e)) \leq f(\mathcal{C}(c,e))\) — monotone map property

5. \(f(\mathcal{C}(c,d) \otimes_V \mathcal{C}(d,e)) \leq \mathcal{C}_f(c,e)\) — definition of \(\mathcal{C}_f\)

6. \(\mathcal{C}_f(c,d) \otimes_W \mathcal{C}_f(d,e) \leq \mathcal{C}_f(c,e)\) — transitivity, using 1, 2 and 5

Metric space to preorder(1)

Consider the function \([0,\infty] \xrightarrow{f} \mathbb{B}\) which maps 0 to true and otherwise to false.

Can check that f is monotonic and preserves the monoidal product+unit, so it is a monoidal monotone. (this was shown in Exercise 2.44)

Thus we have a tool to convert metric spaces into preorders.

Exercise 2-67(2)

Recall the “regions of the world” Hausdorff metric space. We learned that a metric space can be converted into a preorder by a particular monoidal monotone map. How would you interpret the resulting preorder?

Exercise 2-68(2)

Find a different monoidal monotone map \(\mathbf{Cost}\xrightarrow{g}\mathbf{Bool}\) from the one in Example 2.65. Using the construction from Proposition 2.64, convert a Lawvere metric space into two different preorders. Find a metric space for which this happens.

Solution(1)

Take the two monoidal monotone maps from Exercise 2.44

f yields a discrete preorder whereas g does not.
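A minimal Python sketch of this change of base, under two assumptions: `g` is taken to be the map sending every finite distance to true (the text only identifies `f` explicitly), and the three-point Lawvere metric space is an illustrative choice. The helper name `change_base` is hypothetical.

```python
import math

INF = math.inf


def change_base(hom, f):
    """Apply a monoidal monotone f to every hom-object (the C_f construction)."""
    return {pair: f(value) for pair, value in hom.items()}


def f(x):
    # True only at distance 0.
    return x == 0


def g(x):
    # Assumed second map: true at any finite distance.
    return x < INF


# Illustrative Lawvere metric space on three points (an assumption).
nodes = ["A", "B", "C"]
rows = [
    [0, 2, 5],
    [INF, 0, 3],
    [INF, INF, 0],
]
hom = {
    (x, y): rows[i][j]
    for i, x in enumerate(nodes)
    for j, y in enumerate(nodes)
}

# Pairs (x, y) with x <= y in each induced preorder.
related_f = sorted(pair for pair, v in change_base(hom, f).items() if v)
related_g = sorted(pair for pair, v in change_base(hom, g).items() if v)

print(related_f)
print(related_g)
```

With this space, `f` relates each point only to itself (a discrete preorder), whereas `g` also relates A to B, A to C and B to C, so the two preorders differ.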

Enriched functors(5)

V-functor(1)

A \(\mathcal{V}\) functor \(\mathcal{X}\xrightarrow{F}\mathcal{Y}\) between two \(\mathcal{V}\) categories

A function \(Ob(\mathcal{X})\xrightarrow{F}Ob(\mathcal{Y})\) subject to the constraint:

\(\forall x_1,x_2 \in Ob(\mathcal{X}): \mathcal{X}(x_1,x_2) \leq \mathcal{Y}(F(x_1),F(x_2))\)

Linked by

- Bool-functors: referenced

- Cost-functors: referenced

- Natural isomorphism of Bool-categories and preorders: referenced

- Feasibility relationships as Bool-profunctors: referenced

- V-profunctors: referenced

- V-profunctor: referenced

- Solution: referenced

- Companion and conjoint: referenced

- V-adjunction: referenced

- Exercise 4-41: referenced

Bool-functors(1)

Monotone maps, considering the source and target preorders as Bool-categories, are in fact Bool-functors.

The monotone map constraint, that \(x_1\ \leq_X\ x_2 \implies F(x_1)\leq_Y F(x_2)\), translates to the enriched category functor constraint, that \(\mathcal{X}(x_1,x_2) \leq \mathcal{Y}(F(x_1),F(x_2))\).

Cost-functors(1)

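A Cost-functor is also known as a Lipschitz function (more precisely, a 1-Lipschitz or non-expansive map).

Because the order on Cost is \(\geq\), the \(\mathcal{V}\)-functor constraint reads \(d_X(x_1,x_2) \geq d_Y(F(x_1),F(x_2))\): the distance between any pair of points does not increase under \(F\).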
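The Cost-functor condition can be tested on a finite sample of points. The sketch below is illustrative only: the function name `is_cost_functor`, the sample points and the two candidate maps are assumptions, and a finite check can refute the condition but cannot prove it for all of \(\mathbb{R}\).

```python
from itertools import product


def is_cost_functor(points, d_x, d_y, F):
    """Check d_X(a, b) >= d_Y(F(a), F(b)) for every pair of sample points."""
    return all(
        d_x(a, b) >= d_y(F(a), F(b))
        for a, b in product(points, repeat=2)
    )


def dist(a, b):
    # Usual distance on the real line.
    return abs(a - b)


sample = [-2.0, 0.0, 0.5, 3.0]

# Halving never increases distances; doubling does.
halve_ok = is_cost_functor(sample, dist, dist, lambda x: x / 2)
double_ok = is_cost_functor(sample, dist, dist, lambda x: 2 * x)

print(halve_ok, double_ok)
```

Note the direction of the inequality: the hom-object inequality \(\mathcal{X}(x_1,x_2) \leq \mathcal{Y}(F(x_1),F(x_2))\) is taken in Cost, whose order is the reverse of the usual order on numbers.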

Exercise 2-73(2)

The concepts of opposite/dagger/skeleton extend from preorders to \(\mathcal{V}\) categories.

Recall an extended metric space \((X,d)\) is a Lawvere metric space with two extra properties.

Show that a skeletal dagger Cost-category is an extended metric space

Solution(1)

The skeletal dagger cost category has a set of objects, \(Ob(\mathcal{X})\) which we can call points.

For any pair of points, we assign a hom-object in \([0,\infty]\) (we can call this a distance function).

Skeletal property enforces the constraint \(d(x,y)=0 \iff x=y\).

The second enriched category property enforces the triangle inequality.

Because we have a dagger category, our distance function is forced to be symmetric.

Just as the ordering information of a preorder is discarded (to yield a set) when we only consider skeletal dagger preorders, information must be discarded from general Cost-categories (Lawvere metric spaces) to yield an extended metric space.

Product V-categories(6)

V-category product(1)

The \(\mathcal{V}\) product of two \(\mathcal{V}\) categories, \(\mathcal{X} \times \mathcal{Y}\)

This is also a \(\mathcal{V}\) category with:

\(Ob(\mathcal{X}\times\mathcal{Y}) := Ob(\mathcal{X})\times Ob(\mathcal{Y})\)

\((\mathcal{X} \times \mathcal{Y})((x,y),(x',y')) := \mathcal{X}(x,x') \otimes \mathcal{Y}(y,y')\)

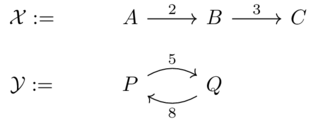

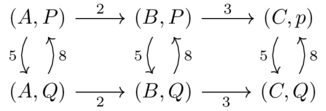

Cost-category product(1)

Let \(\mathcal{X}\) and \(\mathcal{Y}\) be the Lawvere metric spaces (i.e. Cost-categories) defined by the following weighted graphs.

The product can be represented by the following graph:

The distance between any two points \((x,y),(x',y')\) is given by the sum \(d_X(x,x')+d_Y(y,y')\).

We can also consider the Cost-categories as matrices

| \(\mathcal{X}\) | A | B | C |
|---|---|---|---|
| A | 0 | 2 | 5 |
| B | \(\infty\) | 0 | 3 |
| C | \(\infty\) | \(\infty\) | 0 |

| \(\mathcal{Y}\) | P | Q |
|---|---|---|
| P | 0 | 5 |
| Q | 8 | 0 |

| \(\mathcal{X}\times\mathcal{Y}\) | (A,P) | (B,P) | (C,P) | (A,Q) | (B,Q) | (C,Q) |
|---|---|---|---|---|---|---|
| (A,P) | 0 | 2 | 5 | 5 | 7 | 10 |
| (B,P) | \(\infty\) | 0 | 3 | \(\infty\) | 5 | 8 |
| (C,P) | \(\infty\) | \(\infty\) | 0 | \(\infty\) | \(\infty\) | 5 |
| (A,Q) | 8 | 10 | 13 | 0 | 2 | 5 |
| (B,Q) | \(\infty\) | 8 | 11 | \(\infty\) | 0 | 3 |
| (C,Q) | \(\infty\) | \(\infty\) | 8 | \(\infty\) | \(\infty\) | 0 |

Can view this as a 2x2 grid of 3x3 blocks: each is a \(\mathcal{X}\) matrix shifted by \(\mathcal{Y}\).
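The product matrix can be recomputed from the two factor matrices by adding distances componentwise, since the monoidal product in Cost is \(+\). This is a minimal sketch using the \(\mathcal{X}\) and \(\mathcal{Y}\) values given here; the helper names `from_rows` and `cost_product` are hypothetical.

```python
import math
from itertools import product

INF = math.inf


def from_rows(nodes, rows):
    """Turn a square matrix into a dict of hom-objects keyed by (source, target)."""
    return {
        (a, b): rows[i][j]
        for i, a in enumerate(nodes)
        for j, b in enumerate(nodes)
    }


X = from_rows(["A", "B", "C"], [[0, 2, 5], [INF, 0, 3], [INF, INF, 0]])
Y = from_rows(["P", "Q"], [[0, 5], [8, 0]])


def cost_product(hom_x, hom_y):
    """Hom-objects of the Cost-product: d((x, y), (x2, y2)) = d_X(x, x2) + d_Y(y, y2)."""
    return {
        ((x, y), (x2, y2)): dx + dy
        for ((x, x2), dx), ((y, y2), dy) in product(hom_x.items(), hom_y.items())
    }


XY = cost_product(X, Y)
print(XY[(("A", "P"), ("B", "Q"))])  # 2 + 5
print(XY[(("A", "Q"), ("C", "P"))])  # 5 + 8
```

Any pair with an infinite distance in either factor stays infinite in the product, which accounts for the \(\infty\) entries in the table.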

Exercise 2-75(2)

Let \(\mathcal{X} \times \mathcal{Y}\) be the \(\mathcal{V}\)-product of two \(\mathcal{V}\) categories.

Check that for every object we have \(I \leq (\mathcal{X} \times \mathcal{Y})((x,y),(x,y))\)

Check that for every three objects we have:

\((\mathcal{X} \times \mathcal{Y})((x_1,y_1),(x_2,y_2)) \otimes (\mathcal{X} \times \mathcal{Y})((x_2,y_2),(x_3,y_3)) \leq (\mathcal{X} \times \mathcal{Y})((x_1,y_1),(x_3,y_3))\)

Solution(1)

By axioms of \(\mathcal{V}\) categories: \(I \leq \mathcal{X}(x,x)\) and \(I \leq \mathcal{Y}(y,y)\). Abbreviate these hom-objects as \(xx\) and \(yy\).

By monotonicity: \(I \leq xx \land I \leq yy\) implies \(I = I \otimes I \leq xx \otimes yy\).

By the definition of a product category this last term can be written as \((\mathcal{X} \times \mathcal{Y})((x,y),(x,y))\)

By axioms of \(\mathcal{V}\) categories: \(\mathcal{X}(x_1,x_2) \otimes \mathcal{X}(x_2,x_3) \leq \mathcal{X}(x_1,x_3)\) and \(\mathcal{Y}(y_1,y_2) \otimes \mathcal{Y}(y_2,y_3) \leq \mathcal{Y}(y_1,y_3)\)

Therefore, by monotonicity, we have \((\mathcal{X}(x_1,x_2) \otimes \mathcal{X}(x_2,x_3)) \otimes (\mathcal{Y}(y_1,y_2) \otimes \mathcal{Y}(y_2,y_3)) \leq \mathcal{X}(x_1,x_3) \otimes \mathcal{Y}(y_1,y_3)\)

Desugaring the definition of the hom-object in \(\mathcal{X}\times\mathcal{Y}\), the property we need to show is that \((\mathcal{X}(x_1,x_2) \otimes\mathcal{Y}(y_1,y_2)) \otimes (\mathcal{X}(x_2,x_3) \otimes\mathcal{Y}(y_2,y_3)) \leq (\mathcal{X}(x_1,x_3) \otimes\mathcal{Y}(y_1,y_3))\)

Given the associativity and commutativity of the \(\otimes\) operator, we can rearrange the order and ignore parentheses for the four terms on the LHS. Doing so turns the LHS into that of the previous inequality, which yields the desired expression.

Exercise 2-78(2)

Consider \(\mathbb{R}\) as a Lawvere metric space, i.e. as a Cost-category.

Form the Cost-product \(\mathbb{R}\times\mathbb{R}\).

What is the distance from \((5,6)\) to \((-1,4)\)?

Solution(1)

The distance is the Manhattan/\(L_1\) distance: \(|5-(-1)| + |6-4| = 8\)

Computing presented V-categories with matrix mult(33)

Monoidal closed preorders(11)

The term closed in the context of symmetric monoidal closed preorders refers to the fact that a hom-element can be constructed from any two elements (the preorder is closed under the operation of "taking homs").

Consider the hom-element \(v \multimap w\) as a kind of "single use v to w converter"

a and v are enough to get to w iff a is enough to get to a single-use v-to-w converter.

One may read

Closed SMP(1)

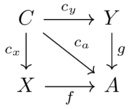

A symmetric monoidal preorder \(\mathcal{V}:=(V,\leq,I,\otimes)\) is symmetric monoidal closed (or just closed)